Tuesday was the anniversary of my first real post on this blog. For the most part, I’ve tried to keep my content here on the technical side of things, but, what the hell, this is a good time to reflect on a year of blogging – what went well, what went poorly, and where I’m going from here.

Howdy. Today, I’m going to discuss rendering UI text using the SlimDX SpriteTextRenderer library. This is a very nifty and light-weight extension library for SlimDX, hosted on CodePlex. In older versions of DirectX, it used to be possible to easily render sprites and text using the ID3DXSprite and ID3DXFont interfaces, but those have been removed in newer versions of DirectX. I’ve experimented with some other approaches, such as using Direct2D and DirectWrite or the DirectX Toolkit, but wasn’t happy with the results. For whatever reason, Direct2D doesn’t interop well with DirectX 11, unless you create a shared DirectX 10 device and jump through a bunch of hoops, and even then it is kind of a PITA. Likewise, I have yet to find C# bindings for the DirectX Toolkit, so that’s kind of a non-starter for me; I’d either have to rewrite the pieces that I want to use with SlimDX, or figure out the marshaling to use the C++ dlls. So for that reason, the SpriteTextRenderer library seems to be my best option at the moment, and it turned out to be relatively simple to integrate into my application framework.

If you’ve used either the old DirectX 9 interfaces or XNA, then it’ll be pretty intuitive how to use SpriteTextRenderer. The SpriteRenderer class has some useful methods to draw 2D sprites, which I haven’t explored much yet, since I have already added code to draw screen-space quads. The TextBlockRenderer class provides some simple and handy methods to draw text up on the screen. Internally, it uses DirectWrite to generate sprite font textures at runtime, so you can use any installed system fonts, and specify the weight, style, and point size easily, without worrying about the nitty-gritty details of creating the font.

One limitation of the TextBlockRenderer class is that you can only use an instance of it to render text with a single font. Thus, if you want to use different font sizes or styles, you need to create different instances for each font that you want to use. Because of this, I’ve written a simple manager class, which I’m calling FontCache, which will provide a central point to store all the fonts that are used, as well as a default font if you just want to throw some text up onto the screen.

The new code for rendering text has been added to my pathfinding demo, available at my GitHub repository, https://github.com/ericrrichards/dx11.git.

As I mentioned last time, I’m going to move on from fiddling with my Terrain class for a little while, and start working on some physics code instead. I bought a copy of Ian Millington’s Game Physics Engine Development some months ago and skimmed through it, but was too busy with other things to really get into the accompanying source code. Now, I do have some free cycles, so I’m planning on working through the examples from the book as my next set of posts.

Once again, the original source code is in C++, rather than the C# that I’ll be using. Millington’s code also uses OpenGL and GLUT, rather than DirectX. Consequently, these aren’t going to be straight ports like the ones I did with most of Frank Luna’s examples; I’ll be porting the core physics code, and then for the examples, I’m just going to have to make something up that showcases the same features.

In any case, we’ll start off with the simple particle physics of Chapters 3 & 4, and build a demo that simulates the ballistics of firing some different types of projectiles. You can find my source for this example on my GitHub page, at https://github.com/ericrrichards/dx11.git.
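
To give a flavor of what simulating the ballistics involves, here is a minimal sketch of a particle integrator, written in Python rather than the C# used in the repository. It is not the code from the book or from my project; the `Particle` fields, the `damping` convention (fraction of velocity kept per second), and the `time_of_flight` helper are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Particle:
    position: list          # [x, y, z]
    velocity: list          # [vx, vy, vz]
    acceleration: list      # constant acceleration, e.g. gravity
    damping: float = 0.99   # assumed: fraction of velocity retained per second

    def integrate(self, dt: float) -> None:
        """Advance the particle by dt seconds with a simple Euler step."""
        if dt <= 0.0:
            raise ValueError("dt must be positive")
        drag = self.damping ** dt
        for i in range(3):
            # Move using the current velocity, then update the velocity.
            self.position[i] += self.velocity[i] * dt
            self.velocity[i] = (self.velocity[i] + self.acceleration[i] * dt) * drag


def time_of_flight(particle: Particle, dt: float = 0.01, max_steps: int = 100_000) -> float:
    """Step the particle until it drops below y = 0 and return the elapsed time."""
    steps = 0
    while steps < max_steps:
        particle.integrate(dt)
        steps += 1
        if particle.position[1] < 0.0:
            break
    return steps * dt


if __name__ == "__main__":
    # A hypothetical projectile: launched at 45 degrees under Earth gravity.
    shell = Particle([0.0, 0.0, 0.0], [30.0, 30.0, 0.0], [0.0, -9.81, 0.0], damping=1.0)
    print("time of flight (s):", time_of_flight(shell))
```

Different projectile types then come down to different starting velocities, accelerations, and damping values fed into the same integrator.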

Last time, we discussed the implementation of our A* pathfinding algorithm, as well as some commonly used heuristics for A*. Now we’re going to put all of the pieces together and get a working example to showcase this pathfinding work.

We’ll need to slightly rework our mouse picking code to return the tile in our map that was hit, rather than just the bounding box center. To do this, we’re going to need to modify our QuadTree, so that the leaf nodes are tagged with the MapTile that their bounding boxes enclose.

We’ll also revisit the function that calculates which portions of the map are connected, as the original method in Part 1 was horribly inefficient on some maps. Instead, we’ll use a different method, which uses a series of depth-first searches to calculate the connected sets of MapTiles in the map. This method is much faster, particularly on maps that have more disconnected sets of tiles.
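
The idea of labeling connected sets with repeated depth-first searches can be sketched roughly as follows. This is a Python illustration, not the C# from the project; the boolean `walkable` grid, the 4-way neighbor rule, and the function name are assumptions.

```python
def label_connected_sets(walkable):
    """Label each walkable tile with the id of its connected set.

    Returns (labels, set_count); unwalkable tiles keep the label -1.
    """
    if not walkable or not walkable[0]:
        return [], 0
    rows, cols = len(walkable), len(walkable[0])
    labels = [[-1] * cols for _ in range(rows)]
    next_label = 0
    for r in range(rows):
        for c in range(cols):
            if not walkable[r][c] or labels[r][c] != -1:
                continue  # blocked, or already reached by an earlier search
            # Iterative depth-first search from this unlabeled tile.
            labels[r][c] = next_label
            stack = [(r, c)]
            while stack:
                cr, cc = stack.pop()
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    nr, nc = cr + dr, cc + dc
                    if (0 <= nr < rows and 0 <= nc < cols
                            and walkable[nr][nc] and labels[nr][nc] == -1):
                        labels[nr][nc] = next_label
                        stack.append((nr, nc))
            next_label += 1
    return labels, next_label


if __name__ == "__main__":
    grid = [
        [1, 1, 0, 1],
        [0, 1, 0, 1],
        [0, 0, 0, 0],
        [1, 0, 1, 1],
    ]
    labels, count = label_connected_sets(grid)
    print(count, labels)
```

Each tile is visited a constant number of times, so the whole pass is linear in the number of tiles, and two tiles can reach each other exactly when their labels match.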

We’ll also need to develop a simple class to represent our unit, which will allow it to update and render itself, as well as maintain pathfinding information. The unit class implementation used here is based in part on material presented in Chapter 9 of Carl Granberg’s Programming an RTS Game with Direct3D.

Finally, we’ll add an additional texture map to our rendering shader, which will draw impassable terrain using a special texture, so that we can easily see the obstacles that our unit will be navigating around. You can see this in the video above; the impassable areas are shown with a slightly darker texture, with dark rifts.

The full code for this example can be found on my GitHub repository, https://github.com/ericrrichards/dx11.git, under the 33-Pathfinding project.

In our previous installment, we discussed the data structures that we will use to represent the graph which we will use for pathfinding on the terrain, as well as the initial pre-processing that was necessary to populate that graph with the information that our pathfinding algorithm will make use of. Now, we are ready to actually implement our pathfinding algorithm. We’ll be using A*, probably the most commonly used graph search algorithm for pathfinding.

A* is one of the most commonly used pathfinding algorithms in games because it is fast, flexible, and relatively simple to implement. A* was originally a refinement of Dijkstra’s graph search algorithm. Dijkstra’s algorithm is guaranteed to determine the shortest path between any two nodes in a directed graph with non-negative edge costs; however, because it only takes into account the cost of reaching an intermediate node from the start node, it tends to consider many nodes that are not on the optimal path. An alternative to Dijkstra’s algorithm is Greedy Best-First search. Best-First uses a heuristic function to estimate the cost of reaching the goal from a given intermediate node, without reference to the cost of reaching the current node from the start node. This means that Best-First tends to consider far fewer nodes than Dijkstra, but is not guaranteed to produce the shortest path in a graph which includes obstacles that are not predicted by the heuristic.

A* blends these two approaches, by using a cost function (f(x)) to evaluate each node based on both the cost from the start node (g(x)) and the estimated cost to the goal (h(x)), so that f(x) = g(x) + h(x). This allows A* to find the shortest path while considering fewer nodes than pure Dijkstra’s algorithm, provided that the heuristic never overestimates the remaining cost. The number of intermediate nodes expanded by A* is somewhat dependent on the characteristics of the heuristic function used. There are generally three cases of heuristics that can be used to control A*, which result in different performance characteristics:

For games, we will generally use heuristics of the third class. It is important that we generate good paths when doing pathfinding for our units, but it is generally not necessary that they be mathematically perfect; they just need to look good enough, and the speed savings are very important when we are trying to cram all of our rendering and update code into just a few tens of milliseconds, in order to hit 30-60 frames per second.

A* uses two sets to keep track of the nodes that it is operating on. The first set is the closed set, which contains all of the nodes that A* has previously considered; this is sometimes called the interior of the search. The other set is the open set, which contains those nodes which are adjacent to nodes in the closed set, but which have not yet been processed by the A* algorithm. The open set is generally sorted by the calculated cost of the node (f(x)), so that the algorithm can easily select the most promising new node to consider. Because of this, we usually consider the open set to be a priority queue. The particular implementation of this priority queue has a large impact on the speed of A*; for best performance, we need to have a data structure that supports fast membership checks (is a node in the queue?), fast removal of the best element in the queue, and fast insertions into the queue. Amit Patel provides a good overview of the pros and cons of different data structures for the priority queue on his A* page; I will be using a priority queue derived from BlueRaja’s Priority Queue class, which is essentially a binary heap. For our closed set, the primary operations that we will perform are insertions and membership tests, which makes the .NET HashSet<T> class a good choice.
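
To make the interplay of the open and closed sets concrete, here is a rough Python sketch of A* on a small grid. It is not the C# implementation from the repository: the 4-way movement with a step cost of 1, the Manhattan heuristic, and all of the names are assumptions. Python’s `heapq` is a plain binary heap without membership checks or priority updates, so this sketch pushes duplicate entries and skips stale ones when they are popped, which is a different trade-off from a queue that supports those operations directly.

```python
import heapq


def manhattan(a, b):
    """Estimated cost h(x) between two (row, col) tiles."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def a_star(walkable, start, goal, heuristic=manhattan):
    """Return the list of tiles from start to goal, or None if unreachable."""
    rows, cols = len(walkable), len(walkable[0])
    open_heap = [(heuristic(start, goal), 0, start)]  # entries are (f, g, node)
    g_cost = {start: 0}
    came_from = {}
    closed = set()
    while open_heap:
        _, g, node = heapq.heappop(open_heap)
        if node in closed:
            continue  # stale duplicate left behind by a cheaper route
        if node == goal:
            path = [node]
            while node in came_from:
                node = came_from[node]
                path.append(node)
            path.reverse()
            return path
        closed.add(node)
        r, c = node
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if not (0 <= nr < rows and 0 <= nc < cols) or not walkable[nr][nc]:
                continue
            neighbor = (nr, nc)
            if neighbor in closed:
                continue
            new_g = g + 1
            if new_g < g_cost.get(neighbor, float("inf")):
                g_cost[neighbor] = new_g
                came_from[neighbor] = node
                f = new_g + heuristic(neighbor, goal)
                heapq.heappush(open_heap, (f, new_g, neighbor))
    return None


if __name__ == "__main__":
    grid = [
        [1, 1, 1, 1],
        [0, 0, 0, 1],
        [1, 1, 1, 1],
    ]
    print(a_star(grid, (0, 0), (2, 0)))
```

Passing a heuristic that returns 0 everywhere turns this into Dijkstra’s algorithm, while scaling the heuristic above the true remaining cost makes the search greedier at the expense of guaranteed shortest paths.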

This was originally intended to be a single post on pathfinding, but it got too long, and so I am splitting it up into three or four smaller pieces. Today, we’re going to look at the data structures that we will use to represent the nodes of our pathfinding graph, and at generating that graph from our terrain class.

When we were working on our quadtree to detect mouse clicks on the terrain, we introduced the concept of logical terrain tiles; these were the smallest sections of the terrain mesh that we wanted to hit when we did mouse picking, representing a 2x2 portion of our fully-tessellated mesh. These logical terrain tiles are a good starting point for generating what I am going to call our map: the 2D grid that we will use for pathfinding, placing units and other objects, defining areas of the terrain, AI calculations, and so forth. At the moment, there isn’t really anything to these tiles, as they are simply a bounding box attached to the leaf nodes of our quad tree. That’s not terribly useful by itself, so we are going to create a data structure to represent these tiles, along with an array to contain them in our Terrain class. Once we have a structure to contain our tile information, we need to extract that information from our Terrain class and heightmap, and generate the graph representing the tiles and the connections between them, so that we can use it in our pathfinding algorithm.

The pathfinding code implemented here was originally derived from Chapter 4 of Carl Granberg’s Programming an RTS Game with Direct3D. I’ve made some heavy modifications, working from that starting point, using material from Amit Patel’s blog and BlueRaja’s C# PriorityQueue implementation. The full code for this example can be found on my GitHub repository, https://github.com/ericrrichards/dx11.git, under the 33-Pathfinding project.

I came across an interesting bug in the wrapper classes for my HLSL shader effects today. In preparation for creating a class to represent a game unit, for the purposes of demonstrating the terrain pathfinding code that I finished up last night, I had been refactoring my BasicModel and SkinnedModel classes to inherit from a common abstract base class, and after getting everything to the state that it could compile again, I had fired up the SkinnedModels example project to make sure everything was still rendering and updating correctly. I got called away to do something else, and ended up checking back in on it a half hour or so later, to find that the example had died with an OutOfMemoryException. Looking at Task Manager, this relatively small demo program was consuming over 1.5 GB of memory!

I restarted the demo, and watched the memory allocation as it ran, and noticed that the memory used seemed to be climbing quite alarmingly, 0.5-1 MB every time Task Manager updated. Somehow, I’d never noticed this before… So I started the project in Visual Studio, using the Performance Wizard to sample the .NET memory allocation, and let the demo run for a couple of minutes. Memory usage had spiked up to about 150 MB, in this simple demo that loaded maybe 35 MB of textures, models, code and external libraries…

Howdy, time for an update. I’ve mostly gotten the first cut of my terrain pathfinding code completed; I’m creating the navigation graph, and I’ve got an implementation of A* finished that allows me to create a list of terrain nodes that represents the path between tile A and tile B. I’m going to hold off a bit on presenting all of that, since I haven’t yet managed to get a nice-looking demo to show off the pathfinding. I need to do some more work to create a simple unit class that can follow the path generated by A*, and between work and life stuff, I haven’t gotten the chance to round that out satisfactorily yet.

I’ve also been doing some pretty heavy refactoring on various engine components, both for design and performance reasons. After the last series of posts on augmenting the Terrain class, and in anticipation of adding even more functionality as I added pathfinding support, I decided to take some time and split out the code that handles Direct3D resources and rendering from the more agnostic logical terrain representation. I’m not looking to do this at the moment, but this might also make implementing an OpenGL rendering system less painful, potentially.

Going through this, I don’t think I am done splitting things up. I’m kind of a fan of small, tightly focused classes, but I’m not necessarily an OOP junkie. Right now, I’m pretty happy with how I have split things out. I’ve got the Terrain class, which contains mostly the rendering independent logical terrain representation, such as the quad tree and picking code, the terrain heightmap and heightmap generation code, and the global terrain state properties (world-space size, initialization information struct, etc). The rendering and DirectX resource management code has been split out into the new TerrainRenderer class, which does all of the drawing and creates all of the DirectX vertex buffers and texture resources.

I’ll spare you all the intermediate gyrations that this refactoring push put me through, and just post the resulting two classes. ReSharper was invaluable in this process; if you have access to a full version of Visual Studio, I don’t think there is a better way to spend $100. I shiver to think of how difficult this would have been without access to its refactoring and renaming tools.

Typically, in a strategy game, in addition to the triangle mesh that we use to draw the terrain, there is an underlying logical representation, usually dividing the terrain into rectangular or hexagonal tiles. This grid is generally what is used to order units around, construct buildings, select targets and so forth. To do all this, we need to be able to select locations on the terrain using the mouse, so we will need to implement terrain/mouse-ray picking for our terrain, similar to what we have done previously, with model triangle picking.

We cannot simply use the same techniques that we used earlier for our terrain, however. For one, in our previous example, we were using a brute-force linear searching technique to find the picked triangle out of all the triangles in the mesh. That worked in that case, but the mesh that we were trying to pick only contained 1850 triangles. I have been using a terrain in these examples that, when fully tessellated, is 2049x2049 vertices, which means that our terrain consists of more than 8 million triangles. It’s pretty unlikely that we could manage to use the same brute-force technique with that many triangles, so we need to use some kind of space partitioning data structure to reduce the portion of the terrain that we need to consider for intersection.

Additionally, we cannot really perform a per-triangle intersection test in any case, since our terrain uses a dynamic LOD system. The triangles of the terrain mesh are only generated on the GPU, in the hull shader, so we don’t have access to the terrain mesh triangles on the CPU, where we will be doing our picking. Because of these two constraints, I have decided on using a quadtree of axis-aligned bounding boxes to implement picking on the terrain. Using a quadtree speeds up our intersection testing considerably, since most of the time we will be able to exclude three-fourths of our terrain from further consideration at each level of the tree. This also maps quite nicely to the concept of a grid layout for representing our terrain, and allows us to select individual terrain tiles fairly efficiently, since the bounding boxes at the terminal leaves of the tree will thus encompass a single logical terrain tile. In the screenshot below, you can see how this works; the boxes drawn in color over the terrain are at double the size of the logical terrain tiles, since I ran out of video memory drawing the terminal bounding boxes, but you can see that the red ball is located on the upper-quadrant of the white bounding box containing it.
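
As a rough illustration of the approach, the Python sketch below builds a quadtree of axis-aligned boxes over a small grid of tiles and descends it with a ray, pruning every subtree whose box the ray misses. It is not the C# and SlimDX code from the project; the 1x1 tile size, the per-tile `heights` grid, and the `QuadNode` and `ray_aabb` names are assumptions, and it reports the nearest leaf box entered by the ray rather than an exact surface hit.

```python
import math


def ray_aabb(origin, direction, box_min, box_max):
    """Slab test: distance along the ray to the box entry point, or None on a miss."""
    t_near, t_far = 0.0, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if abs(d) < 1e-12:
            if o < lo or o > hi:
                return None  # parallel to this slab and outside it
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return None
    return t_near


class QuadNode:
    """Covers tiles [x0, x1) x [z0, z1); leaves hold a single tile."""

    def __init__(self, heights, x0, z0, x1, z1):
        top = max(heights[z][x] for z in range(z0, z1) for x in range(x0, x1))
        self.box_min = (float(x0), 0.0, float(z0))
        self.box_max = (float(x1), float(top), float(z1))
        self.tile = None
        self.children = []
        if x1 - x0 == 1 and z1 - z0 == 1:
            self.tile = (x0, z0)
            return
        xm = (x0 + x1) // 2 if x1 - x0 > 1 else x1
        zm = (z0 + z1) // 2 if z1 - z0 > 1 else z1
        for cx0, cx1 in ((x0, xm), (xm, x1)):
            for cz0, cz1 in ((z0, zm), (zm, z1)):
                if cx1 > cx0 and cz1 > cz0:
                    self.children.append(QuadNode(heights, cx0, cz0, cx1, cz1))

    def pick(self, origin, direction):
        """Return (distance, tile) for the nearest leaf box hit, or None."""
        t = ray_aabb(origin, direction, self.box_min, self.box_max)
        if t is None:
            return None  # the whole subtree is skipped
        if self.tile is not None:
            return (t, self.tile)
        hits = [h for h in (c.pick(origin, direction) for c in self.children)
                if h is not None]
        return min(hits) if hits else None


if __name__ == "__main__":
    heights = [[1.0] * 4 for _ in range(4)]
    heights[2][1] = 3.0  # a taller tile at x = 1, z = 2
    root = QuadNode(heights, 0, 0, 4, 4)
    print(root.pick((1.5, 10.0, 2.5), (0.0, -1.0, 0.0)))
```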

Minimaps are a common feature of many different types of games, especially those in which the game world is larger than the area the player can see on screen at once. Generally, a minimap allows the player to keep track of where they are in the larger game world, and in many games, particularly strategy and simulation games where the view camera is not tied to any specific player character, it allows the player to move their viewing location more quickly than by using the direct camera controls. Often, a minimap will also provide a high-level view of unit movement, building locations, fog-of-war and other game specific information.

Today, we will look at implementing a minimap that will show us a bird’s-eye view of our Terrain class. We’ll also superimpose the frustum for our main rendering camera over the terrain, so that we can easily see how much of the terrain is in view. We’ll also support moving our viewpoint by clicking on the minimap. All of this functionality will be wrapped up into a class, so that we can render multiple minimaps, and place them wherever we like within our application window.
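
The click-to-move part boils down to mapping a pixel inside the minimap rectangle to a point on the terrain. Here is a small Python sketch of that mapping, not the C# from the Minimap project; it assumes the terrain is centered on the world origin, that the top edge of the minimap corresponds to +Z, and the function and parameter names are made up for illustration.

```python
def minimap_to_world(click, minimap_rect, terrain_size):
    """Convert a screen-space click to a world-space (x, z) position.

    click        -- (x, y) in screen pixels
    minimap_rect -- (left, top, width, height) of the minimap in screen pixels
    terrain_size -- (width, depth) of the terrain in world units
    Returns None when the click falls outside the minimap.
    """
    cx, cy = click
    left, top, width, height = minimap_rect
    # Normalize the click to [0, 1] across the minimap.
    u = (cx - left) / width
    v = (cy - top) / height
    if not (0.0 <= u <= 1.0 and 0.0 <= v <= 1.0):
        return None
    world_w, world_d = terrain_size
    # Assumed convention: terrain centered at the origin, screen-down is -Z.
    x = (u - 0.5) * world_w
    z = (0.5 - v) * world_d
    return (x, z)


if __name__ == "__main__":
    rect = (600, 400, 200, 200)
    print(minimap_to_world((700, 500), rect, (2048.0, 2048.0)))
```

The camera can then be moved so that it looks at the returned position, with the height looked up from the terrain.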

As always, the full code for this example can be downloaded from GitHub, at https://github.com/ericrrichards/dx11.git. The relevant project is the Minimap project. The implementation of this minimap code was largely inspired by Chapter 11 of Carl Granberg’s Programming an RTS Game with Direct3D, particularly the camera frustum drawing code. If you can find a copy (it appears to be out of print, and copies are going for outrageous prices on Amazon…), I would highly recommend grabbing it.

Now that I’m finished up with everything that I wanted to cover from Frank Luna’s Introduction to 3D Game Programming with Direct3D 11.0, I want to spend some time improving the Terrain class that we introduced earlier. My ultimate goal is to create a two-tiered strategy game, with a turn-based strategic level and either a turn-based or real-time tactical level. My favorite games have always been these kinds of strategic/tactical hybrids, such as (in roughly chronological order) Centurion: Defender of Rome, Lords of the Realm, Close Combat and the Total War series. In all of these games, the tactical combat is one of the main highlights of gameplay, and so the terrain that that combat occurs upon is very important, both aesthetically and for gameplay.

Our first step will be to incorporate some of the graphical improvements that we have recently implemented into our terrain rendering. We will be adding shadow-mapping and SSAO support to the terrain in this installment. In the screenshots below, we have our light source (the sun) low on the horizon behind the mountain range. The first shot shows our current Terrain rendering result, with no shadows or ambient occlusion. In the second, shadows have been added, which, in addition to just showing shadows, has dulled down a lot of the odd-looking highlights in the first shot. The final shot shows both shadow-mapping and ambient occlusion applied to the terrain. The ambient occlusion adds a little more detail to the scene; regardless of its accuracy, I kind of like the effect, just to noise up the textures applied to the terrain, although I may tweak it to lighten the darker spots up a bit.

We are going to need to add another set of effect techniques to our shader effect, to support shadow mapping, as well as a technique to draw to the shadow map, and another technique to draw the normal/depth map for SSAO. For the latter two techniques, we will need to implement a new hull shader, since I would like to have the shadow maps and normal/depth maps match the fully-tessellated geometry; using the normal hull shader that dynamically tessellates may result in shadows that change shape as you move around the map. For the normal/depth technique, we will also need to implement a new pixel shader. Our domain shader is also going to need to be updated, so that it creates the texture coordinates for sampling both the shadow map and the SSAO map, and our pixel shader will need to be updated to do the shadow and ambient occlusion calculations.

This sounds like a lot of work, but really, it is mostly a matter of adapting what we have already done. As always, you can download my full code for this example from GitHub at https://github.com/ericrrichards/dx11.git. This example doesn’t really have a stand-alone project, as it came about as I was on my way to implementing a minimap, and thus these techniques are showcased as part of the Minimap project.

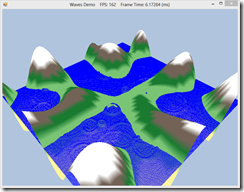

Quite a while back, I presented an example that rendered water waves by computing a wave equation and updating a polygonal mesh each frame. This method produced fairly nice graphical results, but it was very CPU-intensive, and relied on updating a vertex buffer every frame, so it had relatively poor performance.

We can use displacement mapping to approximate the wave calculation and modify the geometry all on the GPU, which can be considerably faster. At a very high level, what we will do is render a polygon grid mesh, using two height/normal maps that we will scroll in different directions and at different rates. Then, for each vertex that we create using the tessellation stages, we will sample the two heightmaps, and add the sampled offsets to the vertex’s y-coordinate. Because we are scrolling the heightmaps at different rates, small peaks and valleys will appear and disappear over time, resulting in an effect that looks like waves. Using different control parameters, we can control this wave effect, and generate either a still, calm surface, like a mountain pond at first light, or big, choppy waves, like the ocean in the midst of a tempest.
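
The core of the effect, summing two height samples whose texture coordinates scroll at different rates, can be sketched on the CPU in a few lines. This Python snippet is only an illustration of the arithmetic, not the HLSL from the demo; the procedural `sample_heightmap` stand-in for a texture fetch, the scroll rates, and the two height scales are all assumed values.

```python
import math


def sample_heightmap(u, v):
    """Stand-in for a wrapped heightmap texture fetch; returns a height in [-1, 1]."""
    return math.sin(2.0 * math.pi * u) * math.cos(2.0 * math.pi * v)


def displaced_height(x, z, time, scale0=0.4, scale1=1.2):
    """Vertical offset for the vertex at (x, z) after `time` seconds."""
    # Each map gets its own tiling and its own scroll direction and speed.
    u0, v0 = x * 0.05 + time * 0.01, z * 0.05 + time * 0.03
    u1, v1 = x * 0.02 - time * 0.05, z * 0.02 + time * 0.02
    h0 = sample_heightmap(u0, v0)
    h1 = sample_heightmap(u1, v1)
    # The two offsets are simply added to the vertex's y-coordinate.
    return scale0 * h0 + scale1 * h1


if __name__ == "__main__":
    for t in (0.0, 1.0, 2.0):
        print(t, displaced_height(3.0, 7.0, t))
```

Setting both scales near zero gives the calm pond, while larger scales and faster scroll rates give the choppier surface.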

This example is based off of the final exercise of Chapter 18 of Frank Luna’s Introduction to 3D Game Programming with Direct3D 11.0. The original code that inspired this example is not located with the other examples for Chapter 18, but rather in the SelectedCodeSolutions directory. You can download my source code in full from https://github.com/ericrrichards/dx11.git, under the 29-WavesDemo project. One thing to note is that you will need to have a DirectX 11 compatible video card to execute this example, as we will be using tessellation stage shaders that are only available in DirectX 11.

In real-time lighting applications, like games, we usually only calculate direct lighting, i.e. light that originates from a light source and hits an object directly. The Phong lighting model that we have been using thus far is an example of this; we only calculate the direct diffuse and specular lighting. We either ignore indirect light (light that has bounced off of other objects in the scene), or approximate it using a fixed ambient term. This is very fast to calculate, but not terribly physically accurate. Physically accurate lighting models can model these indirect light bounces, but are typically too computationally expensive to use in a real-time application, which needs to render at least 30 frames per second. However, using the ambient lighting term to approximate indirect light has some issues, as you can see in the screenshot below. This depicts our standard skull and columns scene, rendered using only ambient lighting. Because we are using a fixed ambient color, each object is rendered as a solid color, with no definition. Essentially, we are making the assumption that indirect light bounces uniformly onto all surfaces of our objects, which is often not physically accurate.

Naturally, some portions of our scene will receive more indirect light than other portions, if we were actually modeling the way that light bounces within our scene. Some portions of the scene will receive the maximum amount of indirect light, while other portions, such as the nooks and crannies of our skull, should appear darker, since fewer indirect light rays should be able to hit those surfaces because the surrounding geometry would, realistically, block those rays from reaching the surface.

In a classical global illumination scheme, we would simulate indirect light by casting rays from the object surface point in a hemispherical pattern, checking for geometry that would prevent light from reaching the point. Assuming that our models are static, this could be a viable method, provided we performed these calculations off-line; ray tracing is very expensive, since we would need to cast a large number of rays to produce an acceptable result, and performing that many intersection tests can be very expensive. With animated models, this method very quickly becomes untenable; whenever the models in the scene move, we would need to recalculate the occlusion values, which is simply too slow to do in real-time.

Screen-Space Ambient Occlusion is a fast technique for approximating ambient occlusion, developed by Crytek for the game Crysis. We will initially draw the scene to a render target, which will contain the normal and depth information for each pixel in the scene. Then, we can sample this normal/depth surface to calculate occlusion values for each pixel, which we will save to another render target. Finally, in our usual shader effect, we can sample this occlusion map to modify the ambient term in our lighting calculation. While this method is not perfectly realistic, it is very fast, and generally produces good results. As you can see in the screen shot below, using SSAO darkens up the cavities of the skull and around the bases of the columns and spheres, providing some sense of depth.

The code for this example is based on Chapter 22 of Frank Luna’s Introduction to 3D Game Programming with Direct3D 11.0. The example presented here has been stripped down considerably to demonstrate only the SSAO effects; lighting and texturing have been disabled, and the shadow mapping effects in Luna’s example have been removed. The full code for this example can be found at my GitHub repository, https://github.com/ericrrichards/dx11.git, under the SSAODemo2 project. A more faithful adaptation of Luna’s example can also be found in the 28-SsaoDemo project.

This weekend, I updated my home workstation from Windows 8 to Windows 8.1. Just before doing this, I had done a bunch of work on my SSAO implementation, which I was intending to write up here once I got back from a visit home to do some deer hunting and help my parents get their firewood in. When I got back, I fired up my machine, and loaded up VS to run the SSAO sample to grab some screenshots. Immediately, my demo application crashed, while trying to create the DirectX 11 device. I had done some work over the weekend to downgrade the vertex and pixel shaders in the example to SM4, so that they could run on my laptop, which has an older integrated Intel video card that only supports DX10.1. I figured that I had borked something up in the process, so I tried running some of my other, simpler demos. The same error message popped up: DXGI_ERROR_UNSUPPORTED. Now, I am running a GTX 560 Ti, so I know Direct3D 11 should be supported.

However, I have been using Nvidia’s driver update tool to keep myself at the latest and greatest driver version, so I figured that perhaps the latest driver I downloaded had some bugs. Go to Nvidia’s site, check for any updates. Looks like I have the latest driver. Hmm…

So I turned again to Google, trying to find some reason why I would suddenly be unable to create a DirectX device. The fourth result I found was this: http://stackoverflow.com/questions/18082080/d3d11-create-device-debug-on-windows-8-1. Apparently I need to download the Windows 8.1 SDK, now. I’m guessing that, since I had VS installed prior to updating, I didn’t get the latest SDK installed, and the Windows 8 SDK, which I did have installed, wouldn’t cut it anymore, at least when trying to create a debug device. So I went ahead and installed the 8.1 SDK from here. Restart VS, rebuild the project in question, and now it runs perfectly. Argh. At least it’s working again; I just wish I didn’t have to waste an hour futzing around with it…

Shadow mapping is a technique to cast shadows from arbitrary objects onto arbitrary 3D surfaces. You may recall that we implemented planar shadows earlier using the stencil buffer. Although this technique worked well for rendering shadows onto planar (flat) surfaces, this technique does not work well when we want to cast shadows onto curved or irregular surfaces, which renders it of relatively little use. Shadow mapping gets around these limitations by rendering the scene from the perspective of a light and saving the depth information into a texture called a shadow map. Then, when we are rendering our scene to the backbuffer, in the pixel shader, we determine the depth value of the pixel being rendered, relative to the light position, and compare it to a sampled value from the shadow map. If the computed value is greater than the sampled value, then the pixel being rendered is not visible from the light, and so the pixel is in shadow, and we do not compute the diffuse and specular lighting for the pixel; otherwise, we render the pixel as normal. Using a simple point sampling technique for shadow mapping results in very hard, aliased shadows: a pixel is either in shadow or lit; therefore, we will use a sampling technique known as percentage closer filtering (PCF), which uses a box filter to determine how shadowed the pixel is. This allows us to render partially shadowed pixels, which results in softer shadow edges.
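
The depth comparison and the box-filtered PCF lookup can be illustrated on the CPU. The Python sketch below is not the HLSL from the demo: it averages a plain 3x3 neighborhood of point comparisons, and the small depth `bias`, the clamped addressing, and the function name are assumptions.

```python
def pcf_shadow_factor(shadow_map, u, v, depth, bias=0.001):
    """Fraction of the 3x3 neighborhood that is lit: 1.0 is fully lit, 0.0 fully shadowed.

    shadow_map -- 2D list of depths as seen from the light (rows are v, columns are u)
    u, v       -- shadow map coordinates of the pixel, each in [0, 1]
    depth      -- depth of the pixel relative to the light
    """
    size_y, size_x = len(shadow_map), len(shadow_map[0])
    px, py = int(u * size_x), int(v * size_y)
    lit = 0
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            # Clamp so that samples off the edge reuse the border texel.
            sx = min(max(px + dx, 0), size_x - 1)
            sy = min(max(py + dy, 0), size_y - 1)
            # The pixel is lit when nothing closer to the light was recorded.
            if depth - bias <= shadow_map[sy][sx]:
                lit += 1
    return lit / 9.0


if __name__ == "__main__":
    # Left half of the map holds an occluder at depth 0.5; the right half is empty.
    shadow_map = [[0.5, 0.5, 1.0, 1.0] for _ in range(4)]
    for u in (0.125, 0.375, 0.875):
        print(u, pcf_shadow_factor(shadow_map, u, 0.5, depth=0.8))
```

The returned factor would then scale the diffuse and specular terms, so pixels near a shadow edge fade between lit and shadowed instead of snapping from one to the other.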

This example is based on the example from Chapter 21 of Frank Luna’s Introduction to 3D Game Programming with Direct3D 11.0. The full source for this example can be downloaded from my GitHub repository at https://github.com/ericrrichards/dx11.git, under the ShadowDemos project.

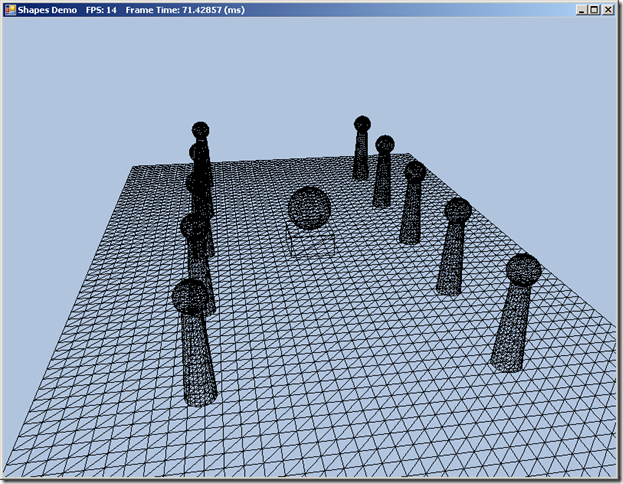

I had promised that we would move on to discussing shadows, using the shadow mapping technique. However, when I got back into the code I had written for that example, I realized that I was really sick of handling all of the geometry for our stock columns & skull scene. So I decided that, rather than manage all of the buffer creation and litter the example app with all of the buffer counts, offsets, materials and world transforms necessary to create our primitive meshes, I would take some time and extend the BasicModel class with some factory methods to create geometric models for us, and leverage the BasicModel class to encapsulate and manage all of that data. This cleans up the example code considerably, so that next time when we do look at shadow mapping, there will be a lot less noise to deal with.

The heavy lifting for these methods is already done; our GeometryGenerator class already does the work of generating the vertex and index data for these geometric meshes. All that we have left to do is massage that geometry into our BasicModel’s MeshGeometry structure, add a default material and textures, and create a Subset for the entire mesh. As the material and textures are public, we can safely initialize the model with a default material and null textures, since we can apply a different material or apply diffuse or normal maps to the model after it is created.

The full source for this example can be downloaded from my GitHub repository, at https://github.com/ericrrichards/dx11.git, under the ShapeModels project.

Particle systems are a technique commonly used to simulate chaotic phenomena, which are not easy to render using normal polygons. Some common examples include fire, smoke, rain, snow, or sparks. The particle system implementation that we are going to develop will be general enough to support many different effects; we will be using the GPU’s StreamOut stage to update our particle systems, which means that all of the physics calculations and logic to update the particles will reside in our shader code, so that by substituting different shaders, we can achieve different effects using our base particle system implementation.
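
As a loose illustration of the kind of per-particle bookkeeping that the update pass performs, here is a CPU-side sketch in Python. The `Particle` fields, the `max_age` lifetime, and the constant `acceleration` are assumptions for the example; in the actual demo this logic lives in shader code and differs per effect.

```python
from dataclasses import dataclass


@dataclass
class Particle:
    position: tuple
    velocity: tuple
    age: float


def update_particles(particles, dt, acceleration=(0.0, -9.8, 0.0), max_age=1.0):
    """Advance every particle by dt seconds and drop the ones that expired."""
    survivors = []
    for p in particles:
        age = p.age + dt
        if age > max_age:
            continue  # expired particles are simply not written back out
        # Integrate velocity first, then position (semi-implicit Euler).
        velocity = tuple(v + a * dt for v, a in zip(p.velocity, acceleration))
        position = tuple(x + v * dt for x, v in zip(p.position, velocity))
        survivors.append(Particle(position, velocity, age))
    return survivors
```

Swapping the acceleration or the expiry rule is the CPU analogue of substituting a different shader to get a different effect.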

The code for this example was adapted from Chapter 20 of Frank Luna’s Introduction to 3D Game Programming with Direct3D 11.0 , ported to C# and SlimDX. The full source for the example can be found at my GitHub repository, at https://github.com/ericrrichards/dx11.git, under the ParticlesDemo project.

Below, you can see the results of adding two particle systems to our terrain demo. At the center of the screen, we have a flame particle effect, along with a rain particle effect.

Sorry for the hiatus, I’ve been very busy with work and life the last couple weeks. Today, we’re going to look at loading meshes with skeletal animations in DirectX 11, using SlimDX and Assimp.Net in C#. This will probably be our most complicated example yet, so bear with me. This example is inspired by Chapter 25 of Frank Luna’s Introduction to 3D Game Programming with Direct3D 11.0 , although with some heavy modifications. Mr. Luna’s code uses a custom animation format, which I found less than totally useful; realistically, we would want to be able to load skinned meshes exported in one of the commonly used 3D modeling formats. To facilitate this, we will again use the .NET port of the Assimp library, Assimp.Net. The code I am using to load and interpret the animation and bone data is heavily based on Scott Lee’s Animation Importer code, ported to C#. The full source for this example can be found on my GitHub repository, at https://github.com/ericrrichards/dx11.git under the SkinnedModels project. The meshes used in the example are taken from the example code for Carl Granberg’s Programming an RTS Game with Direct3D .

Skeletal animation is the standard way to animate 3D character models. Generally, a character model will be represented by two structures: the exterior vertex mesh, or skin, and a tree of control points specifying the joints or bones that make up the skeleton of the mesh. Each vertex in the skin is associated with one or more bones, along with a weight that determines how much influence the bone should have on the final position of the skin vertex. Each bone is represented by a transformation matrix specifying the translation, rotation and scale that determines the final position of the bone. The bones are defined in a hierarchy, so that each bone’s transformation is specified relative to its parent bone. Thus, given a standard bipedal skeleton, if we rotate the upper arm bone of the model, this rotation will propagate to the lower arm and hand bones of the model, analogously to how our actual joints and bones work.
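
The way a parent's transform propagates down the hierarchy can be sketched in a few lines of Python. This is only an illustration: the helper names, the flat `parents` index list, and the row-vector matrix convention (translation in the last row, as DirectX uses) are assumptions, not the data layout used in the demo.

```python
import math


def mat_mul(a, b):
    """Multiply two 4x4 matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def translation(x, y, z):
    # Row-vector convention: the translation lives in the last row.
    return [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [x, y, z, 1]]


def rotation_z(angle):
    c, s = math.cos(angle), math.sin(angle)
    return [[c, s, 0, 0], [-s, c, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]


def global_transforms(local, parents):
    """Combine parent-relative bone matrices into model-space matrices.

    parents[i] is the index of bone i's parent, or -1 for the root.
    Parents are assumed to appear before their children.
    """
    result = []
    for i, matrix in enumerate(local):
        if parents[i] < 0:
            result.append(matrix)
        else:
            # Child-to-parent first, then the parent's model-space transform.
            result.append(mat_mul(matrix, result[parents[i]]))
    return result
```

For example, with an upper arm rotated 90 degrees about Z and a lower arm and hand each offset one unit along their parent's X axis, the hand ends up two units along the model's Y axis, even though only the upper arm was rotated.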

Animations are defined by a series of keyframes, each of which specifies the transformation of each bone in the skeleton at a given time. To get the appropriate transformation at a given time t, we linearly interpolate between the two closest keyframes. Because of this, we will typically store the bone transformations in a decomposed form, specifying the translation, scale and rotation components separately, building the transformation matrix at a given time from the interpolated components. A skinned model may contain many different animation sets; for instance, we’ll commonly have a walk animation, an attack animation, an idle animation, and a death animation.
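
Here is a small Python sketch of sampling one bone's keyframes at a time t. The tuple layout of a keyframe and the function names are made up for illustration, and I am assuming the rotation is stored as a quaternion and blended with a normalized linear interpolation; an importer may well use a spherical interpolation instead.

```python
import bisect
import math


def lerp(a, b, t):
    """Component-wise linear interpolation between two tuples."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))


def nlerp(q0, q1, t):
    """Normalized lerp between two quaternions (x, y, z, w)."""
    # Flip one quaternion if needed so we blend along the shorter arc.
    if sum(a * b for a, b in zip(q0, q1)) < 0:
        q1 = tuple(-c for c in q1)
    q = lerp(q0, q1, t)
    length = math.sqrt(sum(c * c for c in q))
    return tuple(c / length for c in q)


def sample(keyframes, time):
    """keyframes: list of (time, translation, scale, rotation), sorted by time.

    Returns the interpolated (translation, scale, rotation) at `time`.
    """
    times = [k[0] for k in keyframes]
    if time <= times[0]:
        return keyframes[0][1:]
    if time >= times[-1]:
        return keyframes[-1][1:]
    i = bisect.bisect_right(times, time)
    t0, tr0, s0, r0 = keyframes[i - 1]
    t1, tr1, s1, r1 = keyframes[i]
    f = (time - t0) / (t1 - t0)
    return lerp(tr0, tr1, f), lerp(s0, s1, f), nlerp(r0, r1, f)
```

The three interpolated components would then be recombined into a single transformation matrix for the bone.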

The process of loading an animated mesh can be summarized as follows:

To draw the skinned model, we need to advance the animation to the correct frame, then pass the bone transforms to our vertex shader, where we will use the vertex indices and weights to transform the vertex position to the proper location.
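
The per-vertex work can be sketched on the CPU as a weighted blend of the vertex transformed by each of its bones. This Python version is only illustrative: `skin_vertex` and `transform_point` are hypothetical names, and the matrices are assumed to be 4x4 row-vector matrices that already take a bind-pose vertex to its animated position.

```python
def transform_point(p, m):
    """Transform point p by a 4x4 row-vector matrix (translation in row 3)."""
    x, y, z = p
    return tuple(x * m[0][j] + y * m[1][j] + z * m[2][j] + m[3][j]
                 for j in range(3))


def skin_vertex(position, bone_indices, bone_weights, bone_transforms):
    """Blend the vertex position across all bones that influence it."""
    result = [0.0, 0.0, 0.0]
    for index, weight in zip(bone_indices, bone_weights):
        moved = transform_point(position, bone_transforms[index])
        for axis in range(3):
            result[axis] += weight * moved[axis]
    return tuple(result)
```

A vertex influenced equally by a stationary bone and a bone that moved two units up ends up one unit up, which is what gives joints their smooth bend.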

So far, we have either worked with procedurally generated meshes, like our boxes and cylinders, or loaded very simple text-based mesh formats. For any kind of real application, however, we will need to have the capability to load meshes created by artists using 3D modeling and animation programs, like Blender or 3DS Max. There are a number of potential solutions to this problem; we could write an importer to read the specific file format of the program we are most likely to use for our engine. This does present a new host of problems, however: unless we write another importer, we are limited to just using models created with that modeling software, or we will have to rely on or create plugins to our modeling software to reformat models created using different software. There is a myriad of different 3D model formats in more-or-less common use, some of which are open-source, some of which are proprietary; many of both are surprisingly hard to find good, authoritative documentation on. All this makes the prospects of creating a general-purpose, cross-format model importer a daunting task.

Fortunately, there exists an open-source library with support for loading most of the commonly used model formats, ASSIMP, or Open Asset Import Library. Although Assimp is written in C++, there exists a port for .NET, AssimpNet, which we will be able to use directly in our code, without the hassle of wrapping the native Assimp library ourselves. I am not a lawyer, but it looks like both the Assimp and AssimpNet have very permissive licenses that should allow one to use them in any kind of hobby or professional project.

While we can use Assimp to load our model data, we will need to create our own C# model class to manage and render the model. For that, I will be following the example of Chapter 23 of Frank Luna’s Introduction to 3D Game Programming with Direct3D 11.0 . This example will not be a straight conversion of his example code, since I will be ditching the m3d model format which he uses, and instead loading models from the standard old Microsoft DirectX X format. The models I will be using come from the example code for Chapter 4 of Carl Granberg’s Programming an RTS Game with Direct3D , although you may use any of the supported Assimp model formats if you want to use other meshes instead. The full source for this example can be found on my GitHub repository, at https://github.com/ericrrichards/dx11.git, under the AssimpModel project.

Previously, we have used our Terrain class solely with heightmaps that we have loaded from a file. Now, we are going to extend our Terrain class to generate random heightmaps as well, which will add variety to our examples. We will be using Perlin Noise, which is a method of generating naturalistic pseudo-random textures developed by Ken Perlin. One of the advantages of using Perlin noise is that its output is deterministic; for a given set of control parameters, we will always generate the same noise texture. This is desirable for our terrain generation algorithm because it allows us to recreate the same heightmap given the same initial seed value and control parameters.
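
To show the determinism property in miniature, here is a Python sketch that builds a small heightmap from a seed. Note that this uses simple value noise (random lattice values with smooth interpolation, summed over octaves), not Ken Perlin's gradient noise, and all of the names and default parameters are assumptions for illustration.

```python
import random


def make_lattice(seed, size):
    """Seeded grid of random values; the same seed gives the same grid."""
    rng = random.Random(seed)
    return [[rng.random() for _ in range(size)] for _ in range(size)]


def smoothstep(t):
    return t * t * (3.0 - 2.0 * t)


def value_noise(lattice, x, y):
    """Smoothly interpolate the four lattice values around (x, y), x, y >= 0."""
    size = len(lattice)
    x0, y0 = int(x) % size, int(y) % size
    x1, y1 = (x0 + 1) % size, (y0 + 1) % size
    tx, ty = smoothstep(x - int(x)), smoothstep(y - int(y))
    top = lattice[y0][x0] + (lattice[y0][x1] - lattice[y0][x0]) * tx
    bottom = lattice[y1][x0] + (lattice[y1][x1] - lattice[y1][x0]) * tx
    return top + (bottom - top) * ty


def generate_heightmap(seed, width, height, octaves=4, persistence=0.5,
                       frequency=0.05):
    """Sum several octaves of noise into heights normalized to [0, 1]."""
    lattice = make_lattice(seed, 64)
    rows = []
    for j in range(height):
        row = []
        for i in range(width):
            total, amplitude, freq, norm = 0.0, 1.0, frequency, 0.0
            for _ in range(octaves):
                total += value_noise(lattice, i * freq, j * freq) * amplitude
                norm += amplitude
                amplitude *= persistence  # each octave contributes less
                freq *= 2.0               # and varies twice as quickly
            row.append(total / norm)
        rows.append(row)
    return rows
```

Calling `generate_heightmap` twice with the same seed and parameters yields identical heightmaps, which is the property we rely on to recreate a terrain.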

Because we will be generating random heightmaps for our terrain, we will also need to generate an appropriate blendmap to texture the resulting terrain. We will be using a simple method that assigns the different terrain textures based on the heightmap values; a more complex simulation might model the effects of weather, temperature and moisture to assign diffuse textures, but simply using the elevation works quite well with the texture set that we are using.
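
A minimal sketch of elevation-based texture assignment, in Python: each heightmap cell is mapped to a texture layer index by comparing its normalized height against a few band limits. The band values and the function names are placeholders of mine, not the thresholds used in the demo.

```python
def texture_layer(height, max_height, bands=(0.25, 0.5, 0.75)):
    """Return the index of the texture layer for a given elevation.

    Layer 0 is the lowest band (say, grass) and len(bands) the highest
    (say, snow); the actual textures are up to the texture set in use.
    """
    h = height / max_height
    for layer, upper in enumerate(bands):
        if h < upper:
            return layer
    return len(bands)


def build_blend_map(heightmap, max_height):
    """Assign a texture layer to every cell of the heightmap."""
    return [[texture_layer(h, max_height) for h in row] for row in heightmap]
```

A smoother result could be had by writing fractional weights near the band boundaries instead of hard layer indices.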

The code for this example is heavily influenced by Chapter 4 of Carl Granberg’s Programming an RTS Game with Direct3D , adapted into C# and taking advantage of some performance improvements that multi-core CPUs on modern computers offer us. The full code for this example can be found on my GitHub repository, at https://github.com/ericrrichards/dx11.git, under the RandomTerrainDemo project. In addition to the random terrain generation code, we will also look at how we can add Windows Forms controls to our application, which we will use to specify the parameters to use to create the random terrain.

I know that I have been saying that I will cover random terrain generation in my next post for several posts now, and if all goes well, that post will be published today or tomorrow. First, though, we will talk about Direct2D, and using it to draw a loading screen, which we will display while the terrain is being generated to give some indication of the progress of our terrain generation algorithm.

Direct2D is a new 2D rendering API from Microsoft built on top of DirectX 10 (or DirectX 11 in Windows 8). It provides functionality for rendering bitmaps, vector graphics and text in screen-space using hardware acceleration. It is positioned as a successor to the GDI rendering interface, and, so far as I can tell, the old DirectDraw API from earlier versions of DirectX. According to the documentation, it should be possible to use Direct2D to render 2D elements to a Direct3D 11 render target, although I have had some difficulties in actually getting that use-case to work. It does appear to work excellently for pure 2D rendering, say for menu or loading screens, with a simpler syntax than using Direct3D with an orthographic projection.

We will be adding Direct2D support to our base application class D3DApp, and use Direct2D to render a progress bar with some status text during our initialization stage, as we load and generate the Direct3D resources for our scene. Please note that the implementation presented here should only be used while there is no active Direct3D rendering occurring; due to my difficulties in getting Direct2D/Direct3D interoperation to work correctly, Direct2D will be using a different backbuffer than Direct3D, so interleaving Direct2D drawing with Direct3D rendering will result in some very noticeable flickering when the backbuffers switch.

The inspiration for this example comes from Chapter 5 of Carl Granberg’s Programming an RTS Game with Direct3D , where a similar Progress screen is implemented using DirectX9 and the fixed function pipeline. With the removal of the ID3DXFont interface from newer versions of DirectX, as well as the lack of the ability to clear just a portion of a DirectX11 DeviceContext’s render target, a straight conversion of that code would require some fairly heavy lifting to implement in Direct3D 11, and so we will be using Direct2D instead. The full code for this example can be found on my GitHub repository, at https://github.com/ericrrichards/dx11.git, and is implemented in the TerrainDemo and RandomTerrainDemo projects.

Last time, we discussed terrain rendering, using the tessellation stages of the GPU to render the terrain mesh with distance-based LOD. That method required a DX11-compliant graphics card, since the Hull and Domain shader stages are new to Direct3D 11. According to the latest Steam Hardware survey, nearly 65% of gamers have a DX11 graphics card, which is certainly the majority of potential users, and only likely to increase in the future. Of the remaining 35% of gamers, 31% are still using DX10 graphics cards. While we can safely ignore the small percentage of the market that is still limping along on DX9 cards (I myself still have an old laptop with a GeForce Go 7400, but that machine is seven years old and on its last legs), restricting ourselves to only DX11 cards cuts out a third of the potential users of our application. For that reason, I’m going to cover an alternative, CPU-based implementation of our previous LOD terrain rendering example. If you have the option, I would suggest that you only bother with the previous DX11 method, as tessellating the terrain mesh yourself on the CPU is more complex, more error-prone, less performant, and produces a somewhat lower quality result; if you must support DX10 graphics cards, however, this method or one similar to it will do the job, while the hull/domain shader method will not.

We will be implementing this rendering method as an additional render path in our Terrain class, if we detect that the user has a DX10 compatible graphics card. This allows us to reuse a large chunk of the previous code. For the rest, we will adapt portions of the HLSL shader code that we previously implemented into C#, as well as use some inspirations from Chapter 4 of Carl Granberg’s Programming an RTS Game with Direct3D . The full code for this example can be found at my GitHub repository, https://github.com/ericrrichards/dx11.git, under the TerrainDemo project.

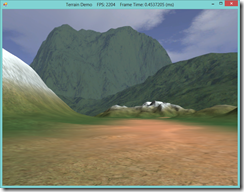

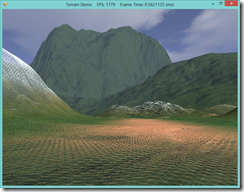

A common task for strategy and other games with an outdoor setting is the rendering of the terrain for the level. Probably the most convenient way to model a terrain is to create a triangular grid, and then perturb the y-coordinates of the vertices to match the desired elevations. This elevation data can be determined by using a mathematical function, as we have done in our previous examples, or by sampling an array or texture known as a heightmap. Using a heightmap to describe the terrain elevations allows us more fine-grain control over the details of our terrain, and also allows us to define the terrain easily, either using a procedural method to create random heightmaps, or by creating an image in a paint program.

Because a terrain can be very large, we will want to optimize the rendering of it as much as possible. One easy way to save rendering cycles is to only draw the vertices of the terrain that can be seen by the player, using frustum culling techniques similar to those we have already covered. Another way is to render the mesh using a variable level of detail, by using the Hull and Domain shaders to render the terrain mesh with more polygons near the camera, and fewer in the distance, in a manner similar to that we used for our Displacement mapping effect. Combining the two techniques allows us to render a very large terrain, with a very high level of detail, at a high frame rate, although it does limit us to running on DirectX 11 compliant graphics cards.
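
The distance-based level of detail boils down to mapping a patch's distance from the camera to a tessellation factor. Here is one way that mapping could look, sketched in Python; the distance range and the power-of-two exponent range are illustrative assumptions, not values taken from the demo.

```python
def tess_factor(distance, min_dist=20.0, max_dist=500.0,
                min_tess=0.0, max_tess=6.0):
    """Return a tessellation factor of 2^max_tess up close, 2^min_tess far away."""
    # Normalize the distance into [0, 1] and clamp it.
    s = min(max((distance - min_dist) / (max_dist - min_dist), 0.0), 1.0)
    # Interpolate the exponent from max_tess (near) to min_tess (far).
    return 2.0 ** (max_tess + (min_tess - max_tess) * s)
```

With these defaults, a patch closer than 20 units is subdivided 64 times per edge, while a patch beyond 500 units is left untessellated.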

We will also use a technique called texture splatting to render our terrain with multiple textures in a single rendering call. This technique involves using a separate texture, called a blend map, in addition to the diffuse textures that are applied to the mesh, in order to define which texture is applied to which portion of the mesh.
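
Per pixel, texture splatting amounts to a chain of linear blends driven by the blend map. The Python sketch below assumes a base color plus extra layers, with one blend-map channel per extra layer; the function name and that layout are assumptions for illustration.

```python
def splat(base_color, layer_colors, blend_weights):
    """Blend each layer over the running color by its blend-map weight."""
    color = base_color
    for layer_color, weight in zip(layer_colors, blend_weights):
        # weight 0 keeps the current color, weight 1 replaces it entirely.
        color = tuple(c + (l - c) * weight for c, l in zip(color, layer_color))
    return color
```

For instance, `splat((0, 1, 0), [(0.5, 0.25, 0), (0.5, 0.5, 0.5)], (1.0, 0.0))` returns the first layer's color unchanged, because its weight is 1 and the second layer's weight is 0.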

The code for this example was adapted from Chapter 19 of Frank Luna’s Introduction to 3D Game Programming with Direct3D 11.0 , with some additional inspirations from Chapter 4 of Carl Granberg’s Programming an RTS Game with Direct3D . The full source for this example can be downloaded from my GitHub repository, at https://github.com/ericrrichards/dx11.git, under the TerrainDemo project.

In our last example on normal mapping and displacement mapping, we made use of the new Direct3D 11 tessellation stages when implementing our displacement mapping effect. For the purposes of the example, we did not examine too closely the concepts involved in making use of these new features, namely the Hull and Domain shaders. These new shader types are sufficiently complicated that they deserve a separate treatment of their own, particularly since we will continue to make use of them for more complicated effects in the future.

The Hull and Domain shaders are covered in Chapter 13 of Frank Luna’s Introduction to 3D Game Programming with Direct3D 11.0 , which I had previously skipped over. Rather than use the example from that chapter, I am going to use the shader effect we developed for our last example instead, so that we can dive into the details of how the hull and domain shaders work in the context of a useful example that we have some background with.

The primary motivation for using the tessellation stages is to offload work from the CPU and main memory onto the GPU. We have already looked at a couple of the benefits of this technique in our previous post, but some of the advantages of using the tessellation stages are:

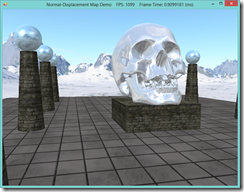

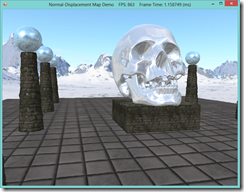

Today, we are going to cover a couple of additional techniques that we can use to achieve more realistic lighting in our 3D scenes. Going back to our first discussion of lighting, recall that thus far, we have been using per-pixel, Phong lighting. This style of lighting was an improvement upon the earlier method of Gouraud lighting, by interpolating the vertex normals over the resulting surface pixels, and calculating the color of an object per-pixel, rather than per-vertex. Generally, the Phong model gives us good results, but it is limited, in that we can only specify the normals to be interpolated from at the vertices. For objects that should appear smooth, this is sufficient to give realistic-looking lighting; for surfaces that have more uneven textures applied to them, the illusion can break down, since the specular highlights computed from the interpolated normals will not match up with the apparent topography of the surface.

In the screenshot above, you can see that the highlights on the nearest column are very smooth, and match the geometry of the cylinder. However, the column has a texture applied that makes it appear to be constructed out of stone blocks, jointed with mortar. In real life, such a material would have all kinds of nooks and crannies and deformities that would affect the way light hits the surface and create much more irregular highlights than in the image above. Ideally, we would want to model those surface details in our scene, for the greatest realism. This is the motivation for the techniques we are going to discuss today.

One technique to improve the lighting of textured objects is called bump or normal mapping. Instead of just using the interpolated pixel normal, we will combine it with a normal sampled from a special texture, called a normal map, which allows us to match the per-pixel normal to the perceived surface texture, and achieve more believable lighting.
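
Combining the interpolated normal with the sampled one can be sketched as follows. I am assuming the common tangent-space setup here: the normal map stores components packed into [0, 1], and each vertex also carries a tangent vector. The function names are illustrative, and this is Python rather than shader code.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def bumped_normal(sample_rgb, normal, tangent):
    """Turn a normal-map sample into a world-space normal."""
    # Unpack each channel from [0, 1] to [-1, 1].
    nt = tuple(2.0 * c - 1.0 for c in sample_rgb)
    n = normalize(normal)
    # Gram-Schmidt: remove the part of the tangent that lies along n.
    t = normalize(tuple(tc - nc * dot(tangent, n)
                        for tc, nc in zip(tangent, n)))
    b = cross(n, t)
    # Express the sampled normal in the (tangent, bitangent, normal) frame.
    return normalize(tuple(nt[0] * t[i] + nt[1] * b[i] + nt[2] * n[i]
                           for i in range(3)))
```

A "flat" sample of (0.5, 0.5, 1.0) unpacks to (0, 0, 1) and therefore returns the interpolated normal unchanged; any other sample tilts the normal toward the tangent or bitangent.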

The other technique is called displacement mapping. Similarly, we use an additional texture to specify the per-texel surface details, but this time, rather than a surface normal, the texture, called a displacement map or heightmap, stores an offset that indicates how much the texel sticks out or is sunken in from its base position. We use this offset to modify the position of the vertices of an object along the vertex normal. For best results, we can increase the tessellation of the mesh using a domain shader, so that the vertex resolution of our mesh is as great as the resolution of our heightmap. Displacement mapping is often combined with normal mapping, for the highest level of realism.

This example is based on Chapter 18 of Frank Luna’s Introduction to 3D Game Programming with Direct3D 11.0. You can download the full source for this example from my GitHub repository, at https://github.com/ericrrichards/dx11.git, under the NormalDisplacementMaps project.

NOTE: You will need to have a DirectX 11 compatible video card in order to use the displacement mapping method presented here, as it makes use of the Domain and Hull shaders, which are new to DX 11.

Last time, we looked at using cube maps to render a skybox around our 3D scenes, and also how to use that sky cubemap to render some environmental reflections onto our scene objects. While this method of rendering reflections is relatively cheap, performance-wise, and can give an additional touch of realism to background geometry, it has some serious limitations if you look at it too closely. For one, none of our local scene geometry is captured in the sky cubemap, so, for instance, you can look at our reflective skull in the center and see the reflections of the distant mountains, which should be occluded by the surrounding columns. This deficiency can be overlooked for minor details, or for surfaces with low reflectivity, but it really sticks out if you have a large, highly reflective surface. Additionally, because we are using the same cubemap for all objects, the reflections at any object in our scene are not totally accurate, as our cubemap sampling technique does not take into account the position of the environment-mapped object in the scene.

The solution to these issues is to render a cube map, at runtime, for each reflective object using Direct3D. By rendering the cubemap for each object on the fly, we can incorporate all of the visible scene details, (characters, geometry, particle effects, etc) in the reflection, which looks much more realistic. This is, of course, at the cost of the additional overhead involved in rendering these additional cubemaps each frame, as we have to effectively render the whole scene six times for each object that requires dynamic reflections.

This example is based on the second portion of Chapter 17 of Frank Luna’s Introduction to 3D Game Programming with Direct3D 11.0 , with the code ported to C# and SlimDX from the native C++ used in the original example. You can download the full source for this example from my GitHub repository, at https://github.com/ericrrichards/dx11.git, under the DynamicCubeMap project.

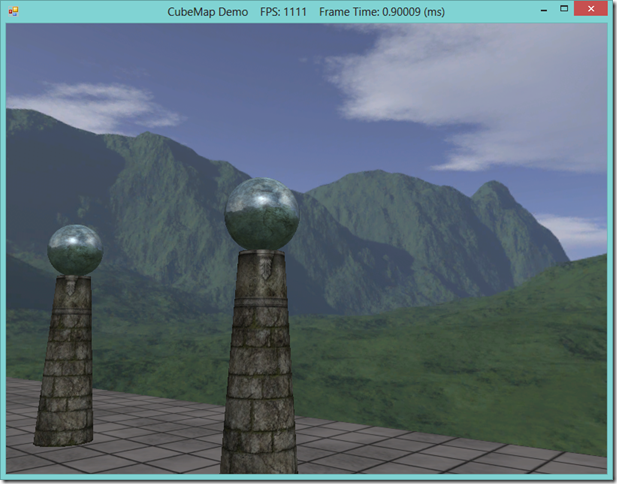

This time, we are going to take a look at a special class of texture, the cube map, and a couple of the common applications for cube maps, skyboxes and environment-mapped reflections. Skyboxes allow us to model far away details, like the sky or distant scenery, to create a sense that the world is more expansive than just our scene geometry, in an inexpensive way. Environment-mapped reflections allow us to model reflections on surfaces that are irregular or curved, rather than on flat, planar surfaces as in our Mirror Demo.

The code for this example is adapted from the first part of Chapter 17 of Frank Luna’s Introduction to 3D Game Programming with Direct3D 11.0. You can download the full source for this example from my GitHub repository, https://github.com/ericrrichards/dx11.git, under the CubeMap project.

Our skull & columns scene, with a skybox and environment-mapped reflections on the column tops

Hi everybody. This past week has been a rough one for me, as far as getting out any additional tutorials. Between helping my future sister-in-law move into a place in Cambridge, a deep sea fishing trip, a year-long project at work finally coming to a critical stage, and the release of Rome Total War II, it's been hard for me to find the hours to hammer out an article. Not to fear, next week should see an explosion of new content, as I am way ahead on the coding side. Expect articles on skyboxes and reflections using cube maps, normal and displacement mapping, terrain rendering with texture splatting and constant LOD, and loading meshes in commercial formats using Assimp.Net. Here are some teaser screenshots...

Using cube maps to render a skybox and reflections:

Real-time reflections using a dynamic cube map:

Normal and displacement mapping (click for full-size):

Captions: Flat lighting; Normal mapped lighting; Normal mapped lighting and displacement mapped geometry

Terrain rendering:

Wire-frame mode; note that the far hills are made up of far fewer polygons than the nearer details.

Loading meshes with commercial file formats using Assimp.Net:

So far, we have only been concerned with drawing a 3D scene to the 2D computer screen, by projecting the 3D positions of objects to the 2D pixels of the screen. Often, you will want to perform the reverse operation; given a pixel on the screen, which object in the 3D scene corresponds to that pixel? Probably the most common application for this kind of transformation is selecting and moving objects in the scene using the mouse, as in most modern real-time strategy games, although the concept has other applications.

The traditional method of performing this kind of object picking relies on a technique called ray-casting. We shoot a ray from the camera position through the selected point on the near-plane of our view frustum, which is obtained by converting the screen pixel location into normalized device coordinates, and then intersect the resulting ray with each object in our scene. The first object intersected by the ray is the object that is “picked.”
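
Here is a compact Python sketch of those steps, using bounding spheres as stand-ins for scene objects. The function names, the perspective-projection parameters, and the left-handed view space (camera at the origin looking down +Z) are assumptions for illustration; the demo works with the real view and projection matrices and mesh triangles.

```python
import math


def picking_ray(sx, sy, width, height, fov_y):
    """Build a view-space ray through pixel (sx, sy)."""
    p11 = 1.0 / math.tan(fov_y / 2.0)   # projection matrix element [1][1]
    p00 = p11 / (width / height)        # projection matrix element [0][0]
    # Pixel -> normalized device coordinates in [-1, 1], with y flipped.
    ndc_x = 2.0 * sx / width - 1.0
    ndc_y = 1.0 - 2.0 * sy / height
    d = (ndc_x / p00, ndc_y / p11, 1.0)
    length = math.sqrt(sum(c * c for c in d))
    return (0.0, 0.0, 0.0), tuple(c / length for c in d)


def ray_sphere(origin, direction, center, radius):
    """Distance along a unit-length ray to a sphere, or None on a miss."""
    oc = tuple(o - c for o, c in zip(origin, center))
    b = sum(x * y for x, y in zip(oc, direction))
    c = sum(x * x for x in oc) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return None
    t = -b - math.sqrt(disc)
    if t < 0.0:
        t = -b + math.sqrt(disc)  # ray starts inside the sphere
    return t if t >= 0.0 else None


def pick(origin, direction, spheres):
    """Return the name of the nearest sphere hit by the ray, or None."""
    best_name, best_t = None, None
    for name, center, radius in spheres:
        t = ray_sphere(origin, direction, center, radius)
        if t is not None and (best_t is None or t < best_t):
            best_name, best_t = name, t
    return best_name
```

Clicking the middle of the window produces a ray straight down the view axis, so of two objects lined up in front of the camera, the nearer one is the one picked.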

The code for this example is based on Chapter 16 of Frank Luna’s Introduction to 3D Game Programming with Direct3D 11.0 , with some modifications. You can download the full source from my GitHub repository at https://github.com/ericrrichards/dx11.git, under the PickingDemo project.

Today, we are going to reprise our Camera class from the Camera Demo. In addition to the FPS-style camera that we have already implemented, we will create a Look-At camera, a camera that remains focused on a point and pans around its target. This camera will be similar to the very basic camera we implemented for our initial examples (see the Colored Cube Demo). While our FPS camera is ideal for first-person type views, the Look-At camera can be used for third-person views, or the “birds-eye” view common in city-builder and strategy games. As part of this process, we will abstract out the common functionality that all cameras will share from our FPS camera into an abstract base camera class.

The inspiration for this example comes both from Mr. Luna’s Camera Demo (Chapter 14 of Frank Luna’s Introduction to 3D Game Programming with Direct3D 11.0), and the camera implemented in Chapter 5 of Carl Granberg’s Programming an RTS Game with Direct3D. You can download the full source for this example from my GitHub repository at https://github.com/ericrrichards/dx11.git under the CameraDemo project. To switch between the FPS and Look-At camera, use the F(ps) and L(ook-at) keys on your keyboard.

One of the main bottlenecks to the speed of a Direct3D application is the number of Draw calls that are issued to the GPU, along with the overhead of switching shader constants for each object that is drawn. Today, we are going to look at two methods of optimizing our drawing code. Hardware instancing allows us to minimize the overhead of drawing identical geometry in our scene, by batching the draw calls for our objects and utilizing per-instance data to avoid the overhead in uploading our per-object world matrices. Frustum culling enables us to determine which objects will be seen by our camera, and to skip the Draw calls for objects that will be clipped by the GPU during projection. Together, the two techniques reap a significant increase in frame rate.
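
The culling half of this can be illustrated with a bounding-sphere test against the six frustum planes. This Python sketch assumes each plane is given as `(nx, ny, nz, d)` with a unit normal pointing into the frustum; that representation and the function name are my assumptions, and the demo may test bounding boxes instead.

```python
def sphere_in_frustum(planes, center, radius):
    """Return False only if the sphere lies entirely outside some plane."""
    cx, cy, cz = center
    for nx, ny, nz, d in planes:
        # Signed distance from the sphere's center to the plane.
        distance = nx * cx + ny * cy + nz * cz + d
        if distance < -radius:
            return False  # completely behind this plane: skip the draw call
    return True
```

Objects that straddle a plane still pass the test, which is fine: the goal is only to skip draw calls for objects that are certainly off-screen, and the GPU clips the rest.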

The source code for this example was adapted from the InstancingAndCulling demo from Chapter 15 of Frank Luna’s Introduction to 3D Game Programming with Direct3D 11.0 . Additionally, the frustum culling code for this example was adapted from Chapter 5 of Carl Granberg’s Programming an RTS Game with Direct3D (Luna’s implementation of frustum culling relied heavily on xnacollision.h, which isn’t really included in the base SlimDX). You can download the full source for this example from my GitHub repository at https://github.com/ericrrichards/dx11.git under the InstancingAndCulling project.

Up until now, we have been using a fixed, orbiting camera to view our demo applications. This style of camera works adequately for our purposes, but for a real game project, you would probably want a more flexible type of camera implementation. Additionally, thus far we have been including our camera-specific code directly in our main application classes, which, again, works, but does not scale well to a real game application. Therefore, we will be splitting our camera-related code out into a new class (Camera.cs) that we will add to our Core library. This example maps to the CameraDemo example from Chapter 14 of Frank Luna’s Introduction to 3D Game Programming with Direct3D 11.0. The full code for this example can be downloaded from my GitHub repository, https://github.com/ericrrichards/dx11.git, under the CameraDemo project.

We will be implementing a traditional First-Person style camera, as one sees in many FPS and RPG games. Conceptually, we can think of this style of camera as consisting of a position in our 3D world, typically located at the position of the eyes of the player character, along with a vector frame-of-reference, which defines the direction the character is looking. In most cases, this camera is constrained to only rotate about its X and Y axes, thus we can pitch up and down, or yaw left and right. For some applications, such as a space or aircraft simulation, you would also want to support rotation on the Z (roll) axis. Our camera will support two degrees of motion: back and forward in the direction of our camera’s local Z (Look) axis, and left and right strafing along our local X (Right) axis. Depending on your game type, you might also want to implement methods to move the camera up and down on its local Y axis, for instance for jumping, or climbing ladders. For now, we are not going to implement any collision detection with our 3D objects; our camera will operate very similarly to the Half-Life or Quake camera when using the noclip cheat.
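
The position-plus-frame idea can be sketched in a few lines of Python. This is not the Camera.cs class itself: the class and method names are placeholders, only walking, strafing and yaw are shown (pitch is omitted for brevity), and a left-handed coordinate system with +Z as the initial look direction is assumed.

```python
import math


class FirstPersonCamera:
    def __init__(self, position=(0.0, 0.0, 0.0)):
        self.position = list(position)
        # Orthonormal frame of reference: local X, Y and Z axes.
        self.right = [1.0, 0.0, 0.0]
        self.up = [0.0, 1.0, 0.0]
        self.look = [0.0, 0.0, 1.0]

    def walk(self, distance):
        """Move forward (or back, if negative) along the Look axis."""
        for i in range(3):
            self.position[i] += distance * self.look[i]

    def strafe(self, distance):
        """Move right (or left, if negative) along the Right axis."""
        for i in range(3):
            self.position[i] += distance * self.right[i]

    def yaw(self, angle):
        """Rotate all three axes about the world Y axis by angle radians."""
        c, s = math.cos(angle), math.sin(angle)
        for axis in (self.right, self.up, self.look):
            x, z = axis[0], axis[2]
            axis[0] = x * c + z * s
            axis[2] = -x * s + z * c
```

After a 90 degree yaw, `walk` moves the camera along the world X axis instead of Z, because movement always follows the camera's local axes rather than the world's.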

Our camera class will additionally manage its view and projection matrices, as well as storing information that we can use to extract the view frustum. Below is a screenshot of our scene rendered using the viewpoint of our Camera class (This scene is the same as our scene from the LitSkull Demo, with textures applied to the shapes).

When I first learned about programming DirectX using shaders, it was back when DirectX 9 was the newest thing around. Back then, there were only two stages in the shader pipeline, the Vertex and Pixel shaders that we have been utilizing thus far. DirectX 10 introduced the geometry shader, which allows us to modify entire geometric primitives on the hardware, after they have gone through the vertex shader.

One application of this capability is rendering billboards. Billboarding is a common technique for rendering far-off objects or minor scene details, by replacing a full 3D object with a texture drawn to a quad that is oriented towards the viewer. This is much less performance-intensive, and for far-off objects and minor details, provides a good-enough approximation. As an example, many games use billboarding to render grass or other foliage, and the Total War series renders far-away units as billboard sprites (In Medieval Total War II, you can see this by zooming in and out on a unit; at a certain point, you’ll see the unit “pop”, which is the point where the Total War engine switches from rendering sprite billboards to rendering the full 3D model). The older way of rendering billboards required one to maintain a dynamic vertex buffer of the quads for the billboards, and to transform the vertices to orient towards the viewer on the CPU whenever the camera moved. Dynamic vertex buffers have a lot of overhead, because it is necessary to re-upload the geometry to the GPU every time it changes, along with the additional overhead of uploading four vertices per billboard. Using the geometry shader, we can use a static vertex buffer of 3D points, with only a single vertex per billboard, and expand the point to a camera-aligned quad in the geometry shader.
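
What the geometry shader does for each point can be mimicked on the CPU. The Python sketch below expands one billboard center into the four corners of a quad that stays upright and turns about the Y axis to face the camera; the function name and the corner ordering are assumptions for illustration, and it assumes the eye is not directly above or below the billboard.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def billboard_quad(center, width, height, eye):
    """Return the four corners of a Y-axis-aligned, camera-facing quad."""
    up = (0.0, 1.0, 0.0)
    # Direction toward the eye, projected onto the XZ plane so the
    # billboard stays vertical (this fails if the eye is straight overhead).
    look = normalize((eye[0] - center[0], 0.0, eye[2] - center[2]))
    right = cross(up, look)
    half_w, half_h = width / 2.0, height / 2.0
    corners = []
    for sx, sy in ((1, -1), (1, 1), (-1, -1), (-1, 1)):
        corners.append(tuple(center[i] + sx * half_w * right[i]
                             + sy * half_h * up[i] for i in range(3)))
    return corners
```

Only the single center point and the size need to live in the vertex buffer; the four corners are derived fresh each frame from the current eye position.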

We’ll illustrate this technique by porting the TreeBillboard example from Chapter 11 of Frank Luna’s Introduction to 3D Game Programming with Direct3D 11.0. This demo builds upon our previous Alpha-blending example, adding some tree billboards to the scene. You can download the full code for this example from my GitHub repository, at https://github.com/ericrrichards/dx11.git under the TreeBillboardDemo project.

In this post, we are going to discuss applications of the Direct3D stencil buffer, by porting the example from Chapter 10 of Frank Luna’s Introduction to 3D Game Programming with Direct3D 11.0 to C# and SlimDX. We will create a simple scene, consisting of an object (in our case, the skull mesh that we have used previously), and some simple room geometry, including a section which will act as a mirror and reflect the rest of the geometry in our scene. We will also implement planar shadows, so that our central object will cast shadows on the rest of our geometry when it is blocking our primary directional light. The full code for this example can be downloaded from my GitHub repository, at https://github.com/ericrrichards/dx11.git, under the MirrorDemo project.

Last time, we covered some of the theory that underlies blending and distance fog. This time, we’ll go over the implementation of our demo that uses these effects, the BlendDemo. This will be based off of our previous demo, the Textured Hills Demo, with an added box mesh and a wire fence texture applied to demonstrate pixel clipping using an alpha map. We’ll need to update our Basic.fx shader code to add support for blending and clipping, as well as the fog effect, and we’ll need to define some new render states to define our blending operations. You can find the full code for this example at https://github.com/ericrrichards/dx11.git under the BlendDemo project.
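As a quick refresher on the math behind these effects, here is a small Python sketch of one common formulation: standard source-alpha blending, a linear fog factor, and an alpha test for clipping. The function names, parameters, and the 0.1 clip threshold are illustrative assumptions on my part, not the actual Basic.fx code.

```python
def alpha_blend(src, dst, src_alpha):
    """Blend a source color over a destination color.

    Uses the usual transparency equation:
    result = src * alpha + dst * (1 - alpha), per channel.
    """
    return tuple(s * src_alpha + d * (1.0 - src_alpha) for s, d in zip(src, dst))


def fog_factor(distance, fog_start, fog_range):
    """Linear fog: 0 before fog_start, 1 at fog_start + fog_range and beyond."""
    t = (distance - fog_start) / fog_range
    return max(0.0, min(1.0, t))


def apply_fog(lit_color, fog_color, factor):
    """Linearly interpolate from the lit color towards the fog color."""
    return tuple(c + factor * (f - c) for c, f in zip(lit_color, fog_color))


def is_clipped(alpha, threshold=0.1):
    """Alpha test: pixels with alpha below the threshold are discarded."""
    return alpha - threshold < 0.0
```

In a pixel shader, the clip test would run before any lighting work, so that discarded pixels (the gaps in the wire fence) skip the expensive part of the shader entirely.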

This time around, we are going to dig into Chapter 9 of Frank Luna’s Introduction to 3D Game Programming with Direct3D 11.0. We will be implementing the BlendDemo in the next couple of posts. We will base this demo off our previous example, the Textured Hills Demo. We will be adding transparency to our water mesh, a fog effect, and a box with a wire texture, with transparency implemented using pixel shader clipping. We’ll need to update our shader effect, Basic.fx, to support these additional effects, along with its C# wrapper class. We’ll also look at additional DirectX blend and rasterizer states, and build up a static class to manage these for us, in the same fashion we used for our InputLayouts and Effects. The full code for this example can be found on GitHub at https://github.com/ericrrichards/dx11.git, under the BlendDemo project. Before we can get to the demo code, however, we need to cover some of the basics.

We’re going to wrap up our exploration of Chapter 8 of Frank Luna’s Introduction to 3D Game Programming with Direct3D 11.0 by implementing one of the exercises from the end of the chapter. This exercise asks us to render a cube, similar to our Crate Demo, but this time to show a succession of different textures, in order to create an animation, similar to a child’s flip book. Mr. Luna suggests that we simply load an array of separate textures and swap them based on our simulation time, but we are going to go one step beyond, and implement a texture atlas, so that we will have all of the frames of animation composited into a single texture, and we can select the individual frames by changing our texture coordinate transform matrix. We’ll wrap this functionality up into a little utility class that we can then reuse.
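The idea of picking a frame out of an atlas with a texture coordinate transform can be sketched in a few lines of Python. I'm assuming here that the frames are laid out in a regular grid, row by row, starting from the top-left corner; the function names are hypothetical and are not those of the utility class described in the post.

```python
def atlas_frame_transform(frame, cols, rows):
    """Return the (scale, offset) that maps [0, 1] uvs onto one atlas cell.

    frame: zero-based frame index, counted left-to-right, top-to-bottom
    cols, rows: number of cells across and down the atlas texture
    """
    col = frame % cols
    row = frame // cols
    scale = (1.0 / cols, 1.0 / rows)
    offset = (col / cols, row / rows)
    return scale, offset


def transform_uv(uv, scale, offset):
    """Apply the scale-then-translate transform to one texture coordinate."""
    return (uv[0] * scale[0] + offset[0], uv[1] * scale[1] + offset[1])


def frame_at(time_seconds, frames_per_second, frame_count):
    """Pick the frame to show at a given simulation time, looping forever."""
    return int(time_seconds * frames_per_second) % frame_count
```

In a shader-based renderer, the scale and offset would be packed into the texture transform matrix (a scaling combined with a translation), so switching frames only means updating one matrix per draw rather than binding a different texture.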

This time around, we are going to revisit our old friend, the Waves Demo, and add textures to the land and water meshes. We will also be taking advantage of the gTexTransform matrix of our Basic.fx shader to tile our land texture multiple times across our mesh, to achieve more detail, and use tiling and translations on our water mesh texture to create a simple but very visually appealing animation for our waves. This demo corresponds to the TexturedHillsAndWaves demo from Chapter 8 of Frank Luna’s Introduction to 3D Game Programming with Direct3D 11.0. You can download the full source for this example from my GitHub repository at https://github.com/ericrrichards/dx11.git.

Here is a still of our finished project this time:

I’m also going to try to upload a video of this demo in action, as the static screenshot doesn’t quite do justice:

This time around, we are going to begin with a simple texturing example. We’ll draw a simple cube, and apply a crate-style texture to it. We’ll need to make some changes to our Basic.fx shader code, as well as the C# wrapper class, BasicEffect. Lastly, we’ll need to create a new vertex structure, which will contain, in addition to the position and normal information we have been using, a uv texture coordinate. If you are following along in Mr. Luna’s book, this would be Chapter 8, the Crate Demo. You can see my full code for the demo at https://github.com/ericrrichards/dx11.git, under DX11/CrateDemo.

This time, we are going to take the scene that we used for the Shapes Demo, and apply a three-point lighting shader. We’ll replace the central sphere from the scene with the skull model that we loaded from a file in the Skull Demo, to make the scene a little more interesting. We will also do some work encapsulating our shader in a C# class, as we will be using this shader effect as a basis that we will extend when we look at texturing, blending and other effects. As always, the full code for this example can be found at my GitHub repository, https://github.com/ericrrichards/dx11.git; the project for this example can be found in the DX11 solution under Examples/LitSkull.

| Rendering the LitSkull scene with 1 light (key lit) | Rendering the LitSkull scene with 2 lights (key and fill lit) | Rendering the LitSkull scene with 3 lights (key, fill and back lit) |

Last time, we ran through a whirlwind tour of lighting and material theory, and built up our data structures and shader functions for our lighting operations. This time around, we’ll get to actually implementing our first lighting demo. We’re going to take the Waves Demo that we covered previously and light it with a directional light, a point light that will orbit the scene, and a spot light that will focus on our camera’s view point. On to the code!

Last time, we finished up our exploration of the examples from Chapter 6 of Frank Luna’s Introduction to 3D Game Programming with Direct3D 11.0. This time, and for the next week or so, we’re going to be adding lighting to our repertoire. There are only two demo applications on tap in this chapter, but they are comparatively meatier, so I may take a couple posts to describe each one.

First off, we are going to modify our previous Waves Demo to render using per-pixel lighting, as opposed to the flat color we used previously. Even without any more advanced techniques, this gives us a much prettier visual.

To get there, we are going to have to do a lot of legwork to implement this more realistic lighting model. We will need to implement three different varieties of lights, a material class, and a much more advanced shader effect. Along the way, we will run into a couple interesting and slightly aggravating differences between the way one would do things in C++ and native DirectX and how we need to implement the same ideas in C# with SlimDX. I would suggest that you follow along with my code from https://github.com/ericrrichards/dx11.git. You will want to examine the Examples/LightingDemo and Core projects from the solution.

Now that we have the demo framework constructed, we can move on to a more interesting example. This time, we are going to use the demo framework that we just developed to render a colored cube in DirectX 11, using a simple vertex and pixel shader. This example corresponds with the BoxDemo from Chapter 6 of Frank Luna’s Introduction to 3D Game Programming with Direct3D 11.0.

A while back I picked up Frank Luna's Introduction to 3D Game Programming with DirectX 11. I've been meaning to start going through it for some time, but some personal things have cut into my programming time significantly. I recently acquired a two-year-old Australian Cattle Dog, and Dixie has kept me on the dead run for weeks... It's very difficult to muster up the courage to dive into a programming project when you feel half zombified from getting up at 4:30 to let the dog out so she will stop her adorable yet frenzied licking attacks on your fiance.

But today, I'm going to start. I have not dealt with DirectX 11 or 10 before; it's only in the last year or so that I built myself a modern desktop to replace my badly aging laptop from 2006. I do have some experience playing around with DirectX 9, so it will be interesting to see what has changed.

As I mentioned, I'll be going through Introduction to 3D Game Programming with DirectX 11 by Frank D. Luna. At first glance, it seems very similar to his previous book, Introduction to 3D Game Programming with DirectX 9.0c: A Shader Approach, which I found to be very well-written, with easy to follow code examples. One wrinkle is that I'm going to be doing this in C#, with SlimDX, rather than in C++ and straight DirectX 11. At my job, I mostly write C#, and have developed a serious fondness for it. Additionally, I've found that attempting to port C++ code over to C# is a somewhat interesting exercise, and not a bad way to learn some of the darker and less-common corners of the C# standard libraries.

I plan on doing a post on each major example, so there may be a couple per chapter, and I don't know what kind of pace my schedule will allow me. If you're interested in looking at my code as I'm going along, you can clone my Git repository at https://github.com/ericrrichards/dx11.git. I'm not going to include the original book code (I couldn't find a license specifically for the code, and I'm struggling to parse the legalese on the inside cover of the book), but you can download it from the book's website at http://www.d3dcoder.net/d3d11.htm.