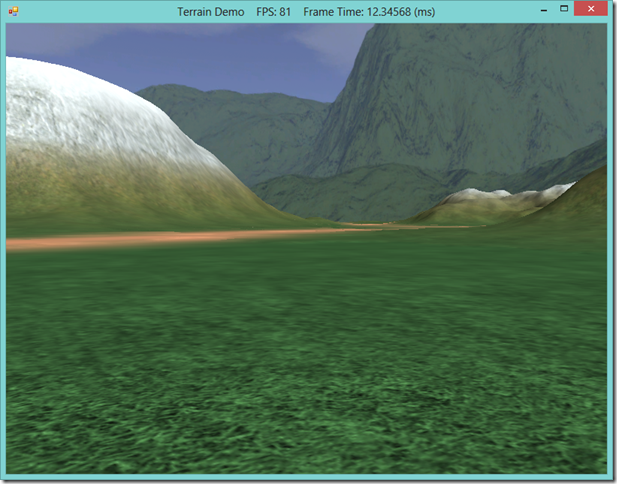

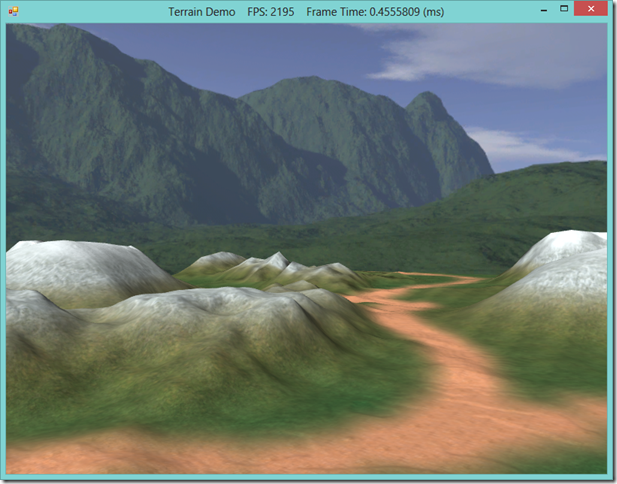

Last time, we discussed terrain rendering, using the tessellation stages of the GPU to render the terrain mesh with distance-based LOD. That method required a DX11-compliant graphics card, since the Hull and Domain shader stages are new to Direct3D11. According to the latest Steam Hardware survey, nearly 65% of gamers have a DX11 graphics card, which is certainly the majority of potential users, and only likely to increase in the future. Of the remaining 35% of gamers, 31% are still using DX10 graphics cards. While we can safely ignore the small percentage of the market that is still limping along on DX9 cards (I myself still have an old laptop with a GeForce Go 7400 in my oldest laptop, but that machine is seven years old and on its last legs), restricting ourselves to only DX 11 cards cuts out a third of potential users of your application. For that reason, I’m going to cover an alternative, CPU-based implementation of our previous LOD terrain rendering example. If you have the option, I would suggest that you only bother with the previous DX11 method, as tessellating the terrain mesh yourself on the CPU is relatively more complex, prone to error, less performant, and produces a somewhat lower quality result; if you must support DX10 graphics cards, however, this method or one similar to it will do the job, while the hull/domain shader method will not.

We will be implementing this rendering method as an additional render path in our Terrain class, if we detect that the user has a DX10 compatible graphics card. This allows us to reuse a large chunk of the previous code. For the rest, we will adapt portions of the HLSL shader code that we previously implemented into C#, as well as use some inspirations from Chapter 4 of Carl Granberg’s Programming an RTS Game with Direct3D . The full code for this example can be found at my GitHub repository, https://github.com/ericrrichards/dx11.git, under the TerrainDemo project.