OutOfMemoryException - Eliminating Temporary Allocations with Static Buffers in Effect Wrapper Code

I came across an interesting bug in the wrapper classes for my HLSL shader effects today. In preparation for creating a class to represent a game unit, for the purposes of demonstrating the terrain pathfinding code that I finished up last night, I had been refactoring my BasicModel and SkinnedModel classes to inherit from a common abstract base class, and after getting everything to the state that it could compile again, I had fired up the SkinnedModels example project to make sure everything was still rendering and updating correctly. I got called away to do something else, and ended up checking back in on it a half hour or so later, to find that the example had died with an OutOfMemoryException. Looking at Task Manager, this relatively small demo program was consuming over 1.5 GB of memory!

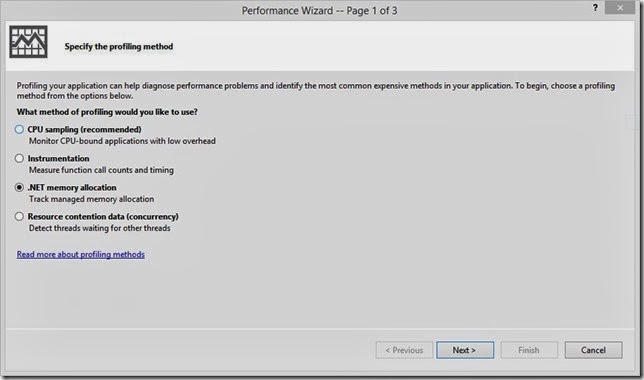

I restarted the demo, and watched the memory allocation as it ran, and noticed that the memory used seemed to be climbing quite alarmingly, 0.5-1 MB every time Task Manager updated. Somehow, I’d never noticed this before… So I started the project in Visual Studio, using the Performance Wizard to sample the .Net memory allocation, and let the demo run for a couple of minutes. Memory usage had spiked up to about 150MB, in this simple demo that loaded maybe 35 MB of textures, models, code and external libraries…

Looking through the performance dump, the vast majority of the memory allocated appeared to be Matrix[]’s and byte[]’s, allocated in Core.FX.BasicEffect, inside of SetBoneTransforms(), SetDirLights(), and SetMaterial(). Looking at the code, which is posted below, there were evidently a bunch of temporary arrays being allocated, either to hold the results of Util.GetArray(), or as a result of transforming the List of bone matrices into an array, in order to pass them into the GPU. I was under the assumption that these temporary arrays ought to be garbage collected, but after some googling around and trying to force the garbage collector to run, I was making no headway.

public void SetDirLights(DirectionalLight[] lights) { System.Diagnostics.Debug.Assert(lights.Length <= 3, "BasicEffect only supports up to 3 lights"); var array = new List<byte>(); foreach (var light in lights) { var d = Util.GetArray(light); // memory hotspot array.AddRange(d); // memory hotspot } _dirLights.SetRawValue(new DataStream(array.ToArray(), false, false), array.Count);// memory hotspot } public void SetMaterial(Material m) { var d = Util.GetArray(m);// memory hotspot _mat.SetRawValue(new DataStream(d, false, false), d.Length); } public void SetBoneTransforms(List<Matrix> bones) { _boneTransforms.SetMatrixArray(bones.ToArray());// memory hotspot }

At this point, I realized that the arrays of directional lights and bone matrices, at least, could be allocated once, and just updated, rather than recreated each time they change. The underlying HLSL shader only supports a maximum of 3 lights and 96 bones, so there was really no need to support arrays larger than these maximums. I realized that I could add additional statically allocated buffers, for the purposes of uploading the data to the GPU, and just copy over the passed-in arrays to this static buffer. The resulting code below ended up reducing my memory growth significantly. (DirectionalLight.Stride is the unmanaged byte size of my DirectionalLight structure, calculated and cached by using Marshal.SizeOf()).

public const int MaxLights = 3; private readonly byte[] _dirLightsArray = new byte[DirectionalLight.Stride*MaxLights]; public const int MaxBones = 96; private readonly Matrix[] _boneTransformsArray = new Matrix[MaxBones]; public void SetDirLights(DirectionalLight[] lights) { System.Diagnostics.Debug.Assert(lights.Length <= MaxLights, "BasicEffect only supports up to 3 lights"); for (int i = 0; i < lights.Length && i < MaxLights; i++) { var light = lights[i]; var d = Util.GetArray(light); Array.Copy(d, 0, _dirLightsArray, i*DirectionalLight.Stride, DirectionalLight.Stride ); } _dirLights.SetRawValue(new DataStream(_dirLightsArray, false, false), _dirLightsArray.Length); } public void SetBoneTransforms(List<Matrix> bones) { for (int i = 0; i < bones.Count && i < MaxBones; i++) { _boneTransformsArray[i] = bones[i]; } _boneTransforms.SetMatrixArray(_boneTransformsArray); }

This cut down on my memory growth problem significantly, but I wasn’t all the way there. Instead of seeing memory go up a couple megabytes every few seconds, I was now seeing growth of a few hundred kilobytes. Firing up the VS profiling tools again, I saw that I was still getting a lot of small allocations as a result of Util.GetArray, inside of SetDirLights() and SetMaterial(). GetArray() seemed to be the culprit, as it was following the same pattern of allocating a new byte array on each call.

public static byte[] GetArray(object o) { var len = Marshal.SizeOf(o); var arr = new byte[len]; // hotspot var ptr = Marshal.AllocHGlobal(len); Marshal.StructureToPtr(o, ptr, true); Marshal.Copy(ptr, arr, 0, len); Marshal.FreeHGlobal(ptr); return arr; }

I was a little more hesitant to use the same trick of statically allocating a buffer here, since I was somewhat concerned that I might be copying the unmanaged memory over to the static array, and then overwriting it in another call before the data contained was consumed by the calling code. However, after a little searching, I realized that this code was only used by my various HLSL Effect wrapping functions and some similar code from the first lighting example that was fundamentally the same. So effectively, with my rendering code being single-threaded, the data in the returned array was only “live” for a couple lines of C# code at most, with no real threat of thread-safety issues, so long as the calling code immediately uploaded the returned data to the GPU or copied it to another array, it seemed as though it should work.

public static class Util { private static byte[] _unmanagedStaging = new byte[1024]; // 1024 is almost certainly overkill public static byte[] GetArray(object o) { Array.Clear(_unmanagedStaging, 0, _unmanagedStaging.Length); var len = Marshal.SizeOf(o); if (len >= _unmanagedStaging.Length) { // only if we need to, resize the static buffer _unmanagedStaging = new byte[len]; } var ptr = Marshal.AllocHGlobal(len); Marshal.StructureToPtr(o, ptr, true); Marshal.Copy(ptr, _unmanagedStaging, 0, len); Marshal.FreeHGlobal(ptr); return _unmanagedStaging; } }

One downside of this change, is that the calling code needs to know the size of the structure passed in to be converted to raw bytes. In my case, this is no real issue, since I only use my own structures, and I know what it was I passed down. I should probably return the actual portion of the returned buffer that is used in an out parameter, but this is good enough for me right now. With this change, my memory growth has shrunk to nearly nothing (I’m still seeing a very slow growth, but it’s pretty negligible, and I need to switch to a different (non-free) profiler to chase down these last bits).

So I solved my problem, but this does seem a little half-baked. I’m not quite certain what the underlying issue with my original approach was (possibly that it lies at the cusp between managed C# code and unmanaged DirectX code?), but for whatever reason, the GC was not working for me, and I’ve had to fall back and implement an incredibly primitive memory management system myself. Oh well… If you’ve got a better idea, I’d love to hear it.