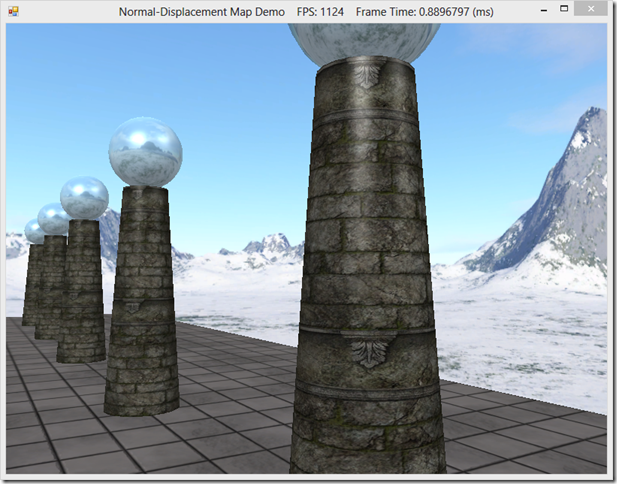

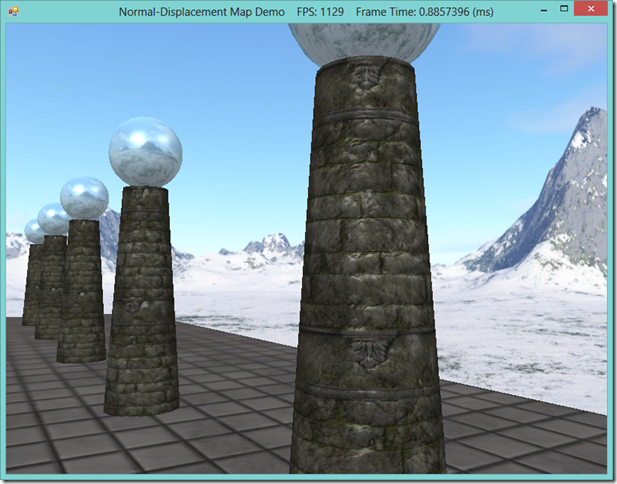

In our last example on normal mapping and displacement mapping, we made use of the new Direct3D 11 tessellation stages when implementing our displacement mapping effect. For the purposes of the example, we did not examine too closely the concepts involved in making use of these new features, namely the Hull and Domain shaders. These new shader types are sufficiently complicated that they deserve a separate treatment of their own, particularly since we will continue to make use of them for more complicated effects in the future.

The Hull and Domain shaders are covered in Chapter 13 of Frank Luna’s Introduction to 3D Game Programming with Direct3D 11.0, which I had previously skipped over. Rather than use the example from that chapter, I am going to use the shader effect we developed for our last example, so that we can dive into the details of how the hull and domain shaders work in the context of a useful example that we already have some background with.

The primary motivation for using the tessellation stages is to offload work from the CPU and main memory onto the GPU. We have already looked at a couple of the benefits of this technique in our previous post, but some of the advantages of using the tessellation stages are:

- We can use a lower detail mesh, and specify additional detail using less memory-intensive techniques, like the displacement mapping technique presented earlier, to produce the final, high-quality mesh that is displayed.

- We can adjust the level of detail of a mesh on-the-fly, depending on the distance of the mesh from the camera or other criteria that we define.

- We can perform expensive calculations, like collisions and physics calculations, on the simplified mesh stored in main memory, and still render the highly-detailed generated mesh.
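
To make the second point concrete, here is a minimal CPU-side sketch of one common way to pick a tessellation factor from camera distance: a clamped linear ramp between a near and a far distance. In practice this calculation would normally run on the GPU as part of the tessellation pipeline; it is written in plain C++ here only so the arithmetic is easy to follow. The `TessSettings` struct, its field names, and the `TessFactorForDistance` function are all hypothetical names chosen for illustration, not part of any Direct3D API or of the effect from the previous post.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical tuning parameters (names and meaning are assumptions for this sketch).
struct TessSettings {
    float minTessDistance; // at or nearer than this distance, use maxTessFactor
    float maxTessDistance; // at or beyond this distance, use minTessFactor
    float minTessFactor;   // coarsest subdivision, used for far-away geometry
    float maxTessFactor;   // finest subdivision, used for nearby geometry
};

// Returns a tessellation factor that falls off linearly with distance
// from the camera, clamped to [minTessFactor, maxTessFactor].
float TessFactorForDistance(float distance, const TessSettings& s) {
    // The ramp is only defined when the far distance is beyond the near one.
    assert(s.maxTessDistance > s.minTessDistance);

    // t is 1 at the near distance and 0 at the far distance.
    float t = (s.maxTessDistance - distance) /
              (s.maxTessDistance - s.minTessDistance);

    // Clamp so geometry nearer or farther than the range stays in bounds.
    t = std::max(0.0f, std::min(1.0f, t));

    // Linear interpolation between the coarsest and finest factors.
    return s.minTessFactor + t * (s.maxTessFactor - s.minTessFactor);
}
```

With assumed settings of a near distance of 1, a far distance of 25, and factors ranging from 1 to 5, a mesh halfway through the range (distance 13) would get a factor of 3, while anything beyond 25 units stays at the minimum factor of 1.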

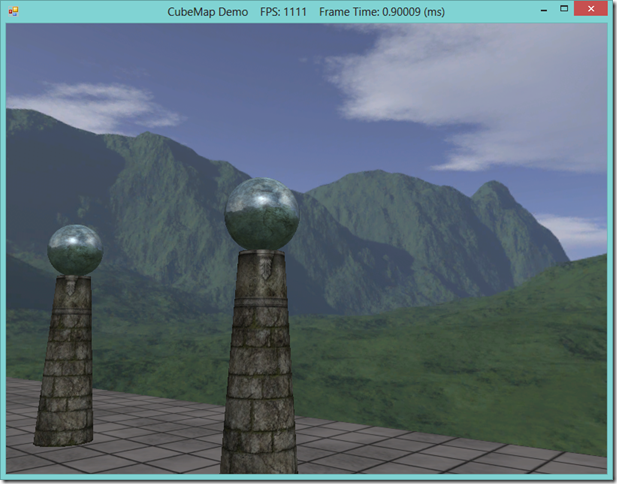

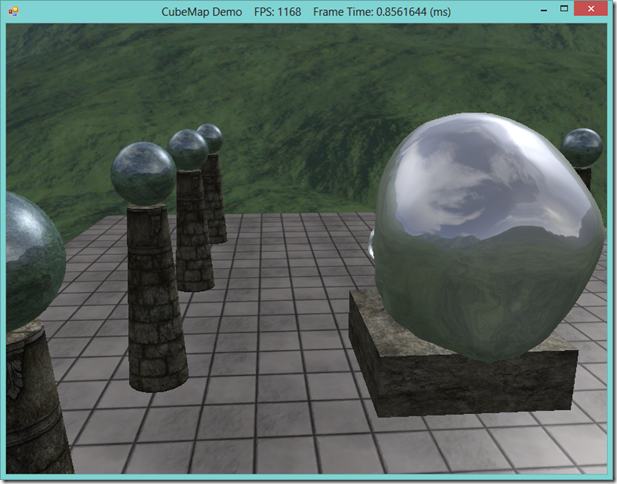

![image thumbnail](http://lh5.ggpht.com/-GBzUN907tdQ/UjCCDWujhII/AAAAAAAADKo/kZbzYOb3p5I/image_thumb%252525255B3%252525255D%25255B1%25255D_thumb%25255B1%25255D.png?imgmax=800)