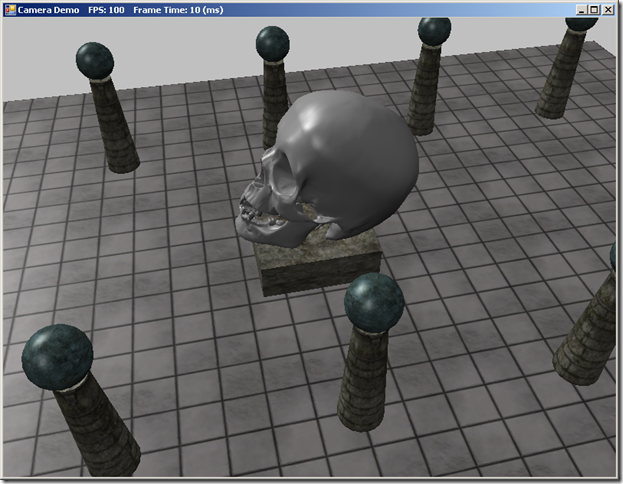

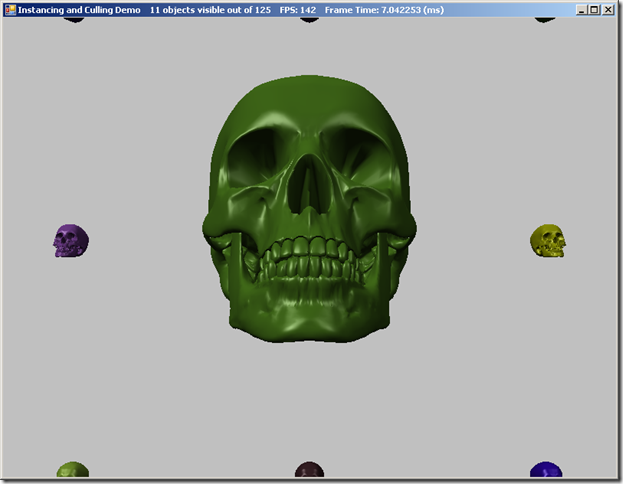

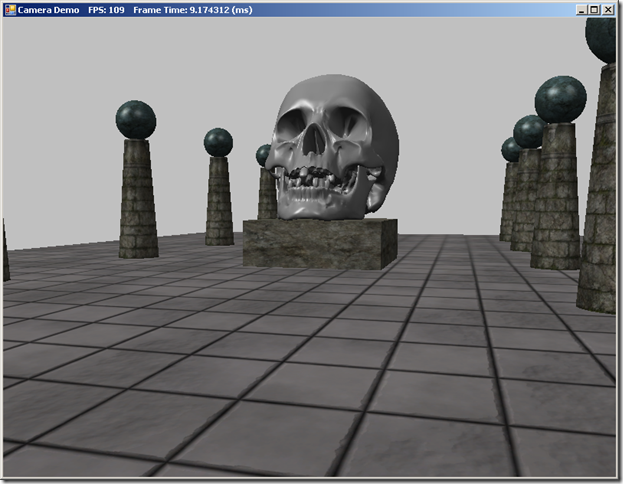

So far, we have only been concerned with drawing a 3D scene to the 2D computer screen by projecting the 3D positions of objects onto the 2D pixels of the screen. Often, you will want to perform the reverse operation: given a pixel on the screen, which object in the 3D scene corresponds to that pixel? Probably the most common application of this kind of transformation is selecting and moving objects in the scene with the mouse, as in most modern real-time strategy games, although the concept has other applications.

The traditional method of performing this kind of object picking relies on a technique called ray-casting. We first convert the screen pixel location into normalized device coordinates, which gives us the selected point on the near plane of our view frustum. We then shoot a ray from the camera position through that point and intersect the ray with each object in our scene. The first object intersected by the ray, that is, the one whose intersection lies closest to the camera, is the object that is “picked.”
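To make the steps above concrete, here is a minimal sketch of the idea in Python rather than the language of the demo project. It is not the code from the PickingDemo project: the function names (`pixel_to_view_ray`, `ray_sphere_hit`, `pick`) are hypothetical, the scene objects are approximated by bounding spheres instead of being tested triangle by triangle, and everything is kept in view space (camera at the origin, looking down +z, as in Direct3D's left-handed convention) so that no inverse view matrix is needed.

```python
import math

def pixel_to_view_ray(sx, sy, width, height, fov_y, aspect):
    """Build a view-space picking ray through the pixel (sx, sy)."""
    # Scale terms of a standard perspective projection matrix.
    p11 = 1.0 / math.tan(fov_y / 2.0)
    p00 = p11 / aspect
    # Pixel -> normalized device coordinates in [-1, 1]; screen y grows downward.
    ndc_x = 2.0 * sx / width - 1.0
    ndc_y = 1.0 - 2.0 * sy / height
    # Undo the projection scaling to get a direction on the z = 1 plane.
    d = (ndc_x / p00, ndc_y / p11, 1.0)
    length = math.sqrt(sum(c * c for c in d))
    origin = (0.0, 0.0, 0.0)  # the camera sits at the view-space origin
    return origin, tuple(c / length for c in d)

def ray_sphere_hit(origin, direction, center, radius):
    """Return the distance along a normalized ray to a sphere, or None on a miss."""
    oc = tuple(o - c for o, c in zip(origin, center))
    b = sum(a * d for a, d in zip(oc, direction))
    c = sum(a * a for a in oc) - radius * radius
    disc = b * b - c
    if disc < 0.0:
        return None  # the ray's line never touches the sphere
    root = math.sqrt(disc)
    t = -b - root
    if t < 0.0:
        t = -b + root  # the ray starts inside the sphere
    return t if t >= 0.0 else None

def pick(origin, direction, spheres):
    """Return the name of the nearest sphere hit by the ray, or None."""
    best_name, best_t = None, math.inf
    for name, center, radius in spheres:
        t = ray_sphere_hit(origin, direction, center, radius)
        if t is not None and t < best_t:
            best_name, best_t = name, t
    return best_name

# Hypothetical scene: (name, view-space center, radius).
scene = [
    ("far", (0.0, 0.0, 10.0), 1.0),
    ("near", (0.0, 0.0, 5.0), 1.0),
    ("side", (5.0, 0.0, 5.0), 1.0),
]
origin, direction = pixel_to_view_ray(400, 300, 800, 600, math.pi / 2, 800 / 600)
picked = pick(origin, direction, scene)
```

Clicking the center pixel of an 800x600 window produces a ray straight down the view axis, so `picked` is `"near"`: both the near and far spheres are hit, and the smaller hit distance wins. In a real scene the ray would typically also be transformed into world or object space before testing, and the sphere test would serve only as a cheap pre-filter before a more precise per-triangle test.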

The code for this example is based on Chapter 16 of Frank Luna’s Introduction to 3D Game Programming with Direct3D 11.0, with some modifications. You can download the full source from my GitHub repository at https://github.com/ericrrichards/dx11.git, under the PickingDemo project.