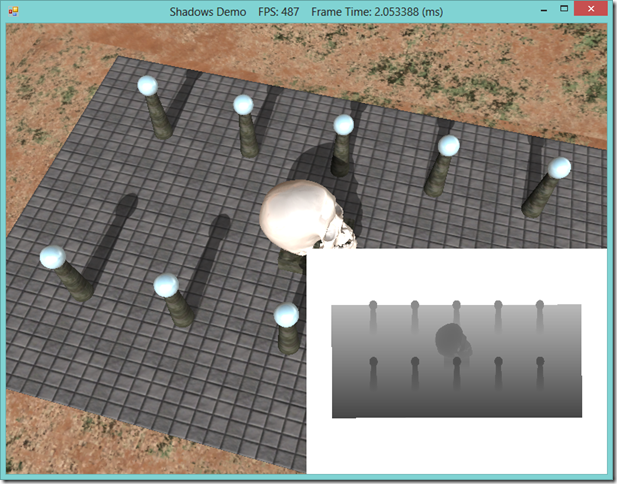

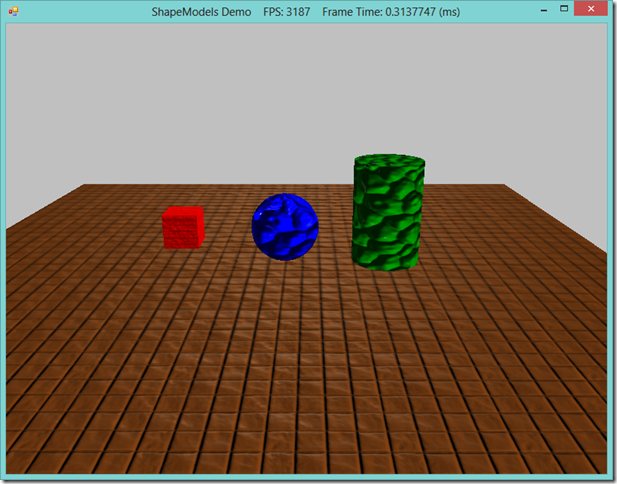

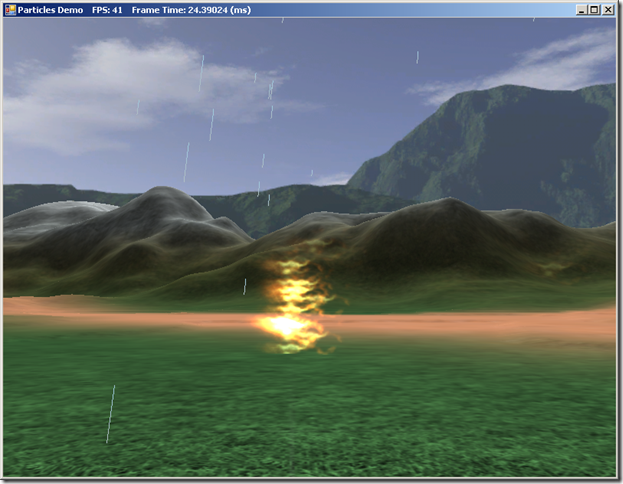

This weekend, I updated my home workstation from Windows 8 to Windows 8.1. Just before doing this, I had done a bunch of work on my SSAO implementation, which I was intending to write up here once I got back from a visit home to do some deer hunting and help my parents get their firewood in. When I got back, I fired up my machine and loaded up VS to run the SSAO sample and grab some screenshots. Immediately, my demo application crashed while trying to create the DirectX 11 device. I had done some work over the weekend to downgrade the vertex and pixel shaders in the example to SM4, so that they could run on my laptop, which has an older integrated Intel video card that only supports DX10.1. I figured that I had borked something up in the process, so I tried running some of my other, simpler demos. The same error message popped up: DXGI_ERROR_UNSUPPORTED. Now, I am running a GTX 560 Ti, so I know Direct3D 11 should be supported.

However, I have been using Nvidia’s driver update tool to keep myself at the latest and greatest driver version, so I figured that perhaps the latest driver I downloaded had some bugs. I went to Nvidia’s site and checked for any updates. Looks like I have the latest driver. Hmm…

So I turned again to Google, trying to find some reason why I would suddenly be unable to create a DirectX device. The fourth result I found was this: http://stackoverflow.com/questions/18082080/d3d11-create-device-debug-on-windows-8-1. Apparently I now need to download the Windows 8.1 SDK. I’m guessing that, since I had VS installed prior to updating, I didn’t get the latest SDK installed, and the Windows 8 SDK, which I did have installed, wouldn’t cut it anymore, at least when trying to create a debug device. So I went ahead and installed the 8.1 SDK from here. I restarted VS, rebuilt the project in question, and now it runs perfectly. Argh. At least it’s working again; I just wish I didn’t have to waste an hour futzing around with it…
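Since the failure only seemed to bite when asking for a debug device, one way to soften this kind of breakage is to retry device creation without the debug flag when the first attempt fails. The following is a rough C++ sketch of that idea, not what my demos actually do. The `DeviceCreator` callback, `CreateResult`, and `createDeviceWithDebugFallback` are hypothetical names I made up for illustration; in a real application the callback would wrap a call to `D3D11CreateDevice`. The constants are copied by value from the Windows SDK headers (as far as I know them) so that the sketch does not depend on `d3d11.h`.

```cpp
#include <cstdint>
#include <functional>

// Values mirrored from the Windows SDK headers so this sketch builds anywhere.
constexpr std::uint32_t kCreateDeviceDebug = 0x2;  // D3D11_CREATE_DEVICE_DEBUG
constexpr std::int32_t kOk = 0;                    // S_OK
constexpr std::int32_t kDxgiErrorUnsupported =
    static_cast<std::int32_t>(0x887A0004u);        // DXGI_ERROR_UNSUPPORTED

// Hypothetical stand-in for a wrapper around D3D11CreateDevice:
// takes the creation flags and returns an HRESULT-style code
// (negative values mean failure).
using DeviceCreator = std::function<std::int32_t(std::uint32_t flags)>;

struct CreateResult {
    std::int32_t hr;         // result of the last creation attempt
    bool debugLayerEnabled;  // true only if the debug device was created
};

// Try to create the device with the debug flag if requested; if that
// fails, retry once without it so the application can still start.
CreateResult createDeviceWithDebugFallback(const DeviceCreator& create,
                                           bool wantDebug) {
    const std::uint32_t flags = wantDebug ? kCreateDeviceDebug : 0u;
    const std::int32_t hr = create(flags);
    if (hr < 0 && wantDebug) {
        // The debug attempt failed (for example, the SDK debug layer is
        // not installed), so drop the flag and try again.
        const std::int32_t retry = create(flags & ~kCreateDeviceDebug);
        return {retry, false};
    }
    return {hr, wantDebug && hr >= 0};
}
```

With something like this in place, a missing or outdated SDK would cost you the debug layer's messages rather than the whole application, and checking `debugLayerEnabled` at startup gives you a place to log a warning that the SDK probably needs updating.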