In real-time lighting applications, like games, we usually only calculate direct lighting, i.e. light that originates from a light source and hits an object directly. The Phong lighting model that we have been using thus far is an example of this; we only calculate the direct diffuse and specular lighting. We either ignore indirect light (light that has bounced off of other objects in the scene), or approximate it using a fixed ambient term. This is very fast to calculate, but not terribly physically accurate. Physically accurate lighting models can model these indirect light bounces, but are typically too computationally expensive to use in a real-time application, which needs to render at least 30 frames per second. However, using the ambient lighting term to approximate indirect light has some issues, as you can see in the screenshot below. This depicts our standard skull and columns scene, rendered using only ambient lighting. Because we are using a fixed ambient color, each object is rendered as a solid color, with no definition. Essentially, we are making the assumption that indirect light bounces uniformly onto all surfaces of our objects, which is often not physically accurate.

Naturally, some portions of our scene will receive more indirect light than other portions, if we were actually modeling the way that light bounces within our scene. Some portions of the scene will receive the maximum amount of indirect light, while other portions, such as the nooks and crannies of our skull, should appear darker, since fewer indirect light rays should be able to hit those surfaces because the surrounding geometry would, realistically, block those rays from reaching the surface.

In a classical global illumination scheme, we would simulate indirect light by casting rays from the object surface point in a hemispherical pattern, checking for geometry that would prevent light from reaching the point. Assuming that our models are static, this could be a viable method, provided we performed these calculations off-line; ray tracing is very expensive, since we would need to cast a large number of rays to produce an acceptable result, and performing that many intersection tests can be very expensive. With animated models, this method very quickly becomes untenable; whenever the models in the scene move, we would need to recalculate the occlusion values, which is simply too slow to do in real-time.

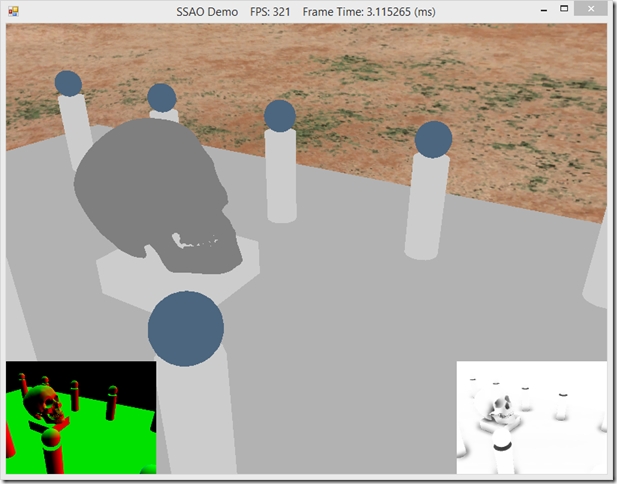

Screen-Space Ambient Occlusion is a fast technique for approximating ambient occlusion, developed by Crytek for the game Crysis. We will initially draw the scene to a render target, which will contain the normal and depth information for each pixel in the scene. Then, we can sample this normal/depth surface to calculate occlusion values for each pixel, which we will save to another render target. Finally, in our usual shader effect, we can sample this occlusion map to modify the ambient term in our lighting calculation. While this method is not perfectly realistic, it is very fast, and generally produces good results. As you can see in the screen shot below, using SSAO darkens up the cavities of the skull and around the bases of the columns and spheres, providing some sense of depth.

The code for this example is based on Chapter 22 of Frank Luna’s Introduction to 3D Game Programming with Direct3D 11.0 . The example presented here has been stripped down considerably to demonstrate only the SSAO effects; lighting and texturing have been disabled, and the shadow mapping effects in Luna’s example have been removed. The full code for this example can be found at my GitHub repository, https://github.com/ericrrichards/dx11.git, under the SSAODemo2 project. A more faithful adaptation of Luna’s example can also be found in the 28-SsaoDemo project.