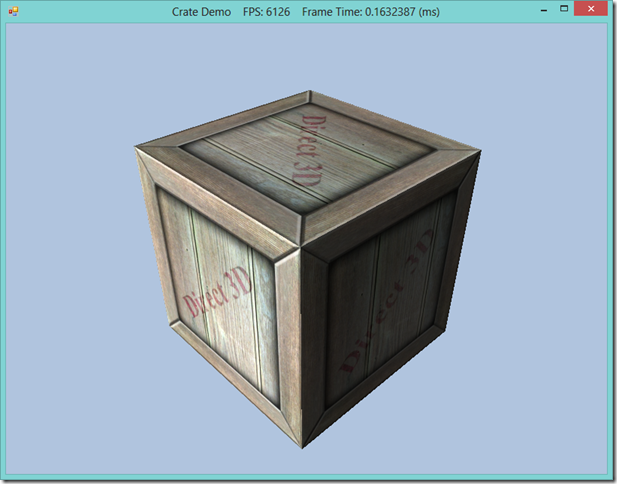

Texturing 101–Crate Demo

This time around, we are going to begin with a simple texturing example: we’ll draw a cube and apply a crate-style texture to it. We’ll need to make some changes to our Basic.fx shader code, as well as to its C# wrapper class, BasicEffect. Lastly, we’ll need to create a new vertex structure which, in addition to the position and normal information we have been using, contains a uv texture coordinate. If you are following along in Mr. Luna’s book, this would be Chapter 8, the Crate Demo. You can see my full code for the demo at https://github.com/ericrrichards/dx11.git, under DX11/CrateDemo.

First, let’s define our new vertex structure. As I mentioned, this will now contain two Vector3s and a Vector2, for the position, normal and uv texture coordinates, which gives us a stride of 12 + 12 + 8 = 32 bytes.

using System.Runtime.InteropServices;
using SlimDX;

[StructLayout(LayoutKind.Sequential)]
public struct Basic32 {
    public Vector3 Position;
    public Vector3 Normal;
    public Vector2 Tex;

    public Basic32(Vector3 position, Vector3 normal, Vector2 texC) {
        Position = position;
        Normal = normal;
        Tex = texC;
    }

    // Size of one vertex in bytes (32), used as the vertex buffer stride.
    public static readonly int Stride = Marshal.SizeOf(typeof(Basic32));
}
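As a quick sanity check on the 32-byte figure, here is a minimal, self-contained sketch. It swaps SlimDX’s vector types for System.Numerics (an assumption made only so it runs without SlimDX; both store tightly packed 32-bit floats), and the Basic32Sketch and StrideCheck names are hypothetical. It prints the struct size and the byte offset of each field, which are the same offsets we hand to the input layout.

```csharp
using System;
using System.Numerics;
using System.Runtime.InteropServices;

// Hypothetical stand-in for Basic32, using System.Numerics instead of SlimDX.
[StructLayout(LayoutKind.Sequential)]
public struct Basic32Sketch
{
    public Vector3 Position; // 3 floats = 12 bytes
    public Vector3 Normal;   // 3 floats = 12 bytes
    public Vector2 Tex;      // 2 floats =  8 bytes
}

public static class StrideCheck
{
    // Byte offset of a field within the struct, as the marshaller lays it out.
    public static int Offset(string field) =>
        Marshal.OffsetOf(typeof(Basic32Sketch), field).ToInt32();

    public static void Main()
    {
        Console.WriteLine($"Stride: {Marshal.SizeOf(typeof(Basic32Sketch))}"); // Stride: 32
        Console.WriteLine($"Position offset: {Offset("Position")}");           // Position offset: 0
        Console.WriteLine($"Normal offset: {Offset("Normal")}");               // Normal offset: 12
        Console.WriteLine($"Tex offset: {Offset("Tex")}");                     // Tex offset: 24
    }
}
```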

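We will also need to add this new vertex format to our global InputLayouts class. I’ve had to put a little more work into the InitAll function, because I want to support this updated, texture-capable version of Basic.fx without breaking or rewriting our previous lighting examples. Both layouts are validated against the input signature of the same effect pass, and the updated vertex shader now expects a TEXCOORD input, so creating the PosNormal layout fails; rather than crashing, InitAll catches the exception, logs it and leaves that layout null. When you run this CrateDemo, you should therefore see a message logged to the console complaining that the PosNormal InputElement[] does not have a TEXCOORD element defined.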

public class InputLayoutDescriptions {
    // Position + normal (12 bytes each), as used by the earlier lighting demos.
    public static readonly InputElement[] PosNormal = {
        new InputElement("POSITION", 0, Format.R32G32B32_Float, 0, 0, InputClassification.PerVertexData, 0),
        new InputElement("NORMAL", 0, Format.R32G32B32_Float, 12, 0, InputClassification.PerVertexData, 0),
    };
    // Position + normal + uv, matching the Basic32 vertex struct (offsets 0, 12, 24).
    public static readonly InputElement[] Basic32 = {
        new InputElement("POSITION", 0, Format.R32G32B32_Float, 0, 0, InputClassification.PerVertexData, 0),
        new InputElement("NORMAL", 0, Format.R32G32B32_Float, 12, 0, InputClassification.PerVertexData, 0),
        new InputElement("TEXCOORD", 0, Format.R32G32_Float, 24, 0, InputClassification.PerVertexData, 0),
    };
}
public class InputLayouts {
    public static void InitAll(Device device) {
        // Both layouts are validated against the input signature of this pass.
        var passDesc = Effects.BasicFX.Light1Tech.GetPassByIndex(0).Description;
        try {
            PosNormal = new InputLayout(device, passDesc.Signature, InputLayoutDescriptions.PosNormal);
        } catch (Direct3D11Exception dex) {
            // Expected with the textured Basic.fx: PosNormal has no TEXCOORD element.
            Console.WriteLine(dex.Message);
            PosNormal = null;
        }
        try {
            Basic32 = new InputLayout(device, passDesc.Signature, InputLayoutDescriptions.Basic32);
        } catch (Direct3D11Exception dex) {
            Console.WriteLine(dex.Message);
            Basic32 = null;
        }
    }
    public static void DestroyAll() {
        Util.ReleaseCom(ref PosNormal);
        Util.ReleaseCom(ref Basic32);
    }
    public static InputLayout PosNormal;
    public static InputLayout Basic32;
}
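The console message comes from the way Direct3D validates an input layout: every input that the vertex shader’s input signature consumes must be supplied by the layout, while extra layout elements are simply not used. The sketch below models only that name-matching rule with plain strings; the semantic lists and the MissingSemantics helper are hypothetical stand-ins for what Direct3D reads from passDesc.Signature, not a SlimDX API, and the real validation checks more than names.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class LayoutCheck
{
    // Returns the shader inputs (semantic name + index) that the layout does not supply.
    // HLSL semantic names are case-insensitive, so compare them that way.
    public static List<string> MissingSemantics(IEnumerable<string> shaderInputs,
                                                IEnumerable<string> layoutElements)
    {
        var supplied = new HashSet<string>(layoutElements, StringComparer.OrdinalIgnoreCase);
        return shaderInputs.Where(s => !supplied.Contains(s)).ToList();
    }

    static string Describe(List<string> missing) =>
        missing.Count == 0 ? "(none)" : string.Join(", ", missing);

    public static void Main()
    {
        // Hypothetical: the inputs consumed by the textured vertex shader's VertexIn.
        string[] texturedVsInputs = { "POSITION0", "NORMAL0", "TEXCOORD0" };
        string[] posNormal = { "POSITION0", "NORMAL0" };
        string[] basic32 = { "POSITION0", "NORMAL0", "TEXCOORD0" };

        // PosNormal missing: TEXCOORD0
        Console.WriteLine("PosNormal missing: " + Describe(MissingSemantics(texturedVsInputs, posNormal)));
        // Basic32 missing: (none)
        Console.WriteLine("Basic32 missing: " + Describe(MissingSemantics(texturedVsInputs, basic32)));
    }
}
```

This is why the try/catch around each layout matters: the PosNormal failure is expected here and only gets logged, while Basic32 is created normally.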

Updated Basic.fx

We will need to add a couple of new shader constants to our Basic.fx file. First, we will add a Texture2D element called gDiffuseMap. We have to declare this variable outside of our constant buffers: a texture is not numeric constant data but a resource, which is bound to the shader through a shader resource view, and constant buffers can only hold numeric values.

Next, we will add an additional per-object matrix, gTexTransform, which we will use to transform the texture coordinates of our vertices in the vertex shader. This lets us animate the texture mapped onto our triangles, or scale our textures so that they tile more effectively across a surface, for instance.

We also need to define a SamplerState, which controls how our pixel shader samples texels from the diffuse map. There are a large number of options and combinations available (see D3D11_SAMPLER_DESC for a discussion of them), but we will use anisotropic filtering with a maximum anisotropy of 4, and the WRAP address mode, which repeats our texture when the coordinates fall outside the [0,1] range.

cbuffer cbPerObject
{
    // Previous per-object constants omitted
    float4x4 gTexTransform;
};

// Nonnumeric values cannot be added to a cbuffer.
Texture2D gDiffuseMap;

SamplerState samAnisotropic
{
    Filter = ANISOTROPIC;
    MaxAnisotropy = 4;
    AddressU = WRAP;
    AddressV = WRAP;
};
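To make AddressU = WRAP concrete: with wrap addressing, a coordinate outside [0,1] keeps only its fractional part, so the texture repeats. Here is a minimal CPU-side sketch comparing it with clamp addressing. The AddressModes class and its functions are hypothetical names, and the sketch ignores filtering entirely: it models only the coordinate mapping, not how anisotropic filtering blends texels.

```csharp
using System;

public static class AddressModes
{
    // WRAP: keep the fractional part, so 1.25 -> 0.25 and -0.25 -> 0.75.
    public static float Wrap(float u) => u - MathF.Floor(u);

    // CLAMP: pin the coordinate to the edge of the texture.
    public static float Clamp(float u) => Math.Clamp(u, 0f, 1f);

    public static void Main()
    {
        foreach (float u in new[] { 0.25f, 1.25f, 2.5f, -0.25f })
        {
            Console.WriteLine($"u = {u,5}: wrap -> {Wrap(u)}, clamp -> {Clamp(u)}");
        }
    }
}
```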

Shader

VertexOut VS(VertexIn vin)
{
    VertexOut vout;
    // Transform position and normal as before...

    // Output vertex attributes for interpolation across triangle.
    vout.Tex = mul(float4(vin.Tex, 0.0f, 1.0f), gTexTransform).xy;
    return vout;
}
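The vertex shader treats each uv pair as the point (u, v, 0, 1) and multiplies it, as a row vector, by gTexTransform. Here is a CPU-side sketch of the same arithmetic using System.Numerics, whose Vector2.Transform(Vector2, Matrix4x4) follows the same convention (it also treats the vector as (x, y, 0, 1)). The TexTransformSketch name, the scale of 4 and the scroll rate of 0.1 per second are illustrative assumptions, not values from the demo: scaling tiles the texture (relying on WRAP addressing), and a translation that grows with time scrolls it.

```csharp
using System;
using System.Numerics;

public static class TexTransformSketch
{
    // Mirrors: vout.Tex = mul(float4(vin.Tex, 0.0f, 1.0f), gTexTransform).xy;
    public static Vector2 Apply(Vector2 uv, Matrix4x4 texTransform) =>
        Vector2.Transform(uv, texTransform);

    public static void Main()
    {
        var corner = new Vector2(1f, 1f);

        // Tiling: scale uv by 4, so the texture repeats 4 times across the face.
        Matrix4x4 tile = Matrix4x4.CreateScale(4f, 4f, 1f);
        Console.WriteLine(Apply(corner, tile));

        // Scrolling: offset u by an amount that grows with time.
        float seconds = 2f;
        Matrix4x4 scroll = Matrix4x4.CreateTranslation(0.1f * seconds, 0f, 0f);
        Console.WriteLine(Apply(corner, scroll));

        // With row vectors, tile * scroll means "scale first, then translate".
        Console.WriteLine(Apply(corner, tile * scroll));
    }
}
```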

float4 PS(VertexOut pin, uniform int gLightCount, uniform bool gUseTexture) : SV_Target
{
    // Calculate to-eye vector as before...

    // Default to multiplicative identity.
    float4 texColor = float4(1, 1, 1, 1);
    if (gUseTexture)
    {
        // Sample texture.
        texColor = gDiffuseMap.Sample(samAnisotropic, pin.Tex);
    }

    //
    // Lighting.
    //
    float4 litColor = texColor;
    if (gLightCount > 0)
    {
        // Sum the light contribution from each light source, as before...

        // Modulate with late add.
        litColor = texColor*(ambient + diffuse) + spec;
    }

    // Common to take alpha from diffuse material and texture.
    litColor.a = gMaterial.Diffuse.a * texColor.a;
    return litColor;
}
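The “modulate with late add” line is easy to check by hand: the texture color scales the ambient and diffuse terms, while the specular term is added afterwards, so highlights are not tinted by the wood texture. Below is a CPU-side sketch of the same arithmetic using System.Numerics.Vector4. The LateAddSketch name and the sample colors are made up for illustration, and the ambient, diffuse and spec sums are assumed to have been computed already, as in the earlier lighting demos.

```csharp
using System;
using System.Numerics;

public static class LateAddSketch
{
    // Mirrors the end of PS(): litColor = texColor*(ambient + diffuse) + spec,
    // then alpha = material diffuse alpha * texture alpha.
    public static Vector4 Combine(Vector4 texColor, Vector4 ambient, Vector4 diffuse,
                                  Vector4 spec, float materialDiffuseAlpha)
    {
        Vector4 lit = texColor * (ambient + diffuse) + spec; // component-wise
        lit.W = materialDiffuseAlpha * texColor.W;
        return lit;
    }

    public static void Main()
    {
        var woodTexel = new Vector4(0.5f, 0.25f, 0.0f, 1.0f);
        var ambient   = new Vector4(0.25f, 0.25f, 0.25f, 0f);
        var diffuse   = new Vector4(0.5f, 0.5f, 0.5f, 0f);
        var spec      = new Vector4(0.25f, 0.25f, 0.25f, 0f);

        // RGB = (0.5, 0.25, 0) * 0.75 + 0.25 = (0.625, 0.4375, 0.25); alpha = 1 * 1 = 1.
        Console.WriteLine(Combine(woodTexel, ambient, diffuse, spec, 1.0f));
    }
}
```

Note that the blue channel ends up at 0.25 even though the texel has no blue at all: that contribution comes entirely from the untinted specular term.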

Updating BasicEffect

To reflect the changes we have made to Basic.fx, we will need to add EffectVariable handles for the new shader variables to our BasicEffect class, along with setter functions for them. We’ll also add handles to the new lit and textured techniques that we have added to our effect. The new member variables and their setters are:

public EffectTechnique Light0TexTech;
public EffectTechnique Light1TexTech;
public EffectTechnique Light2TexTech;
public EffectTechnique Light3TexTech;

private EffectResourceVariable DiffuseMap;
private EffectMatrixVariable TexTransform;

public void SetTexTransform(Matrix m) {
    TexTransform.SetMatrix(m);
}

public void SetDiffuseMap(ShaderResourceView tex) {
    DiffuseMap.SetResource(tex);
}

Crate Demo

The actual implementation of the CrateDemo is very similar to our original BoxDemo. We will need to set up our lights and the material for our crate so that we can properly light the scene, but that should be easy to understand after the last two lighting demos. Building the geometry for our crate should also be straightforward, as we will make use of our GeometryGenerator class, which already has a function to create a box with position, normal and texture coordinates for us. In fact, the only new element comes in our Init() function, where we need to load the texture that we wish to apply into a ShaderResourceView, which can be bound to the gDiffuseMap shader variable. The D3DX11 utility library makes this very easy with its D3DX11CreateShaderResourceViewFromFile() function, which SlimDX wraps as the static factory method ShaderResourceView.FromFile().

public override bool Init() {
    if (!base.Init()) return false;

    Effects.InitAll(Device);
    _fx = Effects.BasicFX;
    InputLayouts.InitAll(Device);

    // Load the crate texture into a shader resource view that can be bound to gDiffuseMap.
    _diffuseMapSRV = ShaderResourceView.FromFile(Device, "Textures/WoodCrate01.dds");

    BuildGeometryBuffers();
    return true;
}

To draw our crate, we follow the same procedure as before, being sure to set our new shader variables (with SetTexTransform() and SetDiffuseMap()) before we render the box, and voilà: we have a nice textured crate, just waiting for somebody to smash it open with a crowbar to get at the health or ammo powerup inside…

Next time…

We’ll return to our old friend, the Hills/Waves demo, and add some textures to our land and water meshes. We’ll play with some different effects using the texture coordinate transform matrix that we mentioned earlier to implement tiling and animation.